Tutorial on Concept extraction

(Still an early draft)

November 15, 2003

Software and the Fable ORB are available as a free download:

http://www.ontologystream.com/cA/tutorials/download/fableORB.zip

The tutorial is self-contained. However there are additional tutorials available.

Contents

Section 1: Some screen shots of the fable ORB

Section 2: Categories of Subject Matter Indicators (SM-Indicators)

Section 3: The Fable Card Deck, construction issues

Additional resources are available on a precursor to this study of linguistic variation within the fables. The precursor study examined 24 FCC public documents containing 3900 sentences. The work is described in the Taxonomy Research Notes, and a SLIP project is available for download. A card deck was extracted manually to produce a descriptive enumeration of the thematic structure of these 24 documents.

As we will see, the results we are obtaining are interesting and perhaps better than those of many other concept extraction technologies; however, we still have issues with completeness and fidelity.

The current work will reproduce the work process but apply this process to the 312 Aesop fables. This collection has been the central test collection used by OntologyStream and the BCNGroup.org for testing and evaluating text understanding algorithms.

The next steps are new for OntologyStream Inc. These steps were first publicly described in a letter to one of the related patent holders.

The architecture for the Ontology Referential Base has not yet been published, simply because it is not quite finished and the documentation still has to be developed. We anticipate publishing it so that the ORB fundamentals are public. (This sentence will be replaced with a link to documentation when Nathan Einwechter completes it.)

Section 1: Some screen shots of the fable ORB

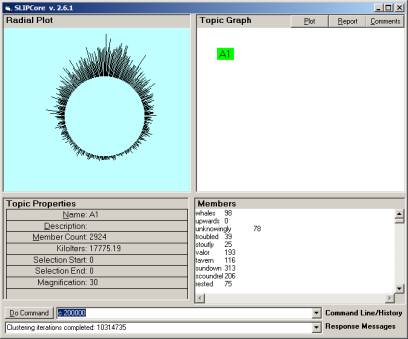

Figure 1.1: The initial clustering of 2924 atoms

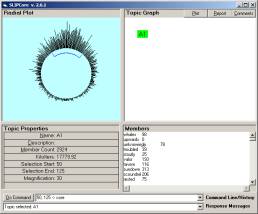

Figure 1.2: Taking the central part, the core, into a subcategory

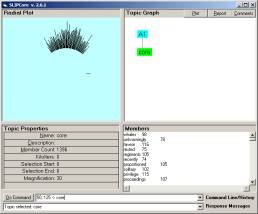

Figure 1.3: Taking the tail (cluster?) and the “residue” into two additional subcategories

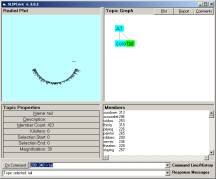

Figure 1.4: Randomly scattering the residue on the circle and then clustering

Figure 1.5: Selecting a subgroup and a (new) residue

Figure 1.6: Selecting a small cluster, and then finding the prime

Figure 1.7: A prime compound is identified

Figure 1.8: The side and prime categories are deleted and a different part of the “spectrum” is examined

Section 2: Categories of Subject Matter Indicators (SM-Indicators)

Our interest is in autonomously creating a descriptive enumeration of SM-Indicators. Our principled position, however, is that humans are needed to judge the fidelity of the correspondence between SM-Indicators and the subjects they indicate.

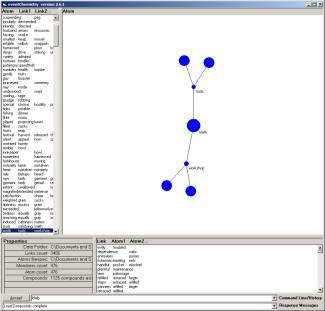

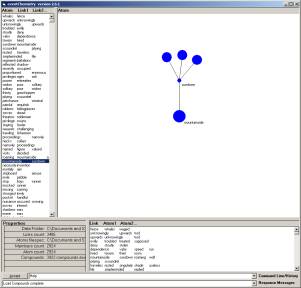

The figure below is an example of an SM-Indicator, having the form of a subject center and a neighborhood in linguistic variation space.

Figure 2.1: SM-Indicator with “smith” as center and “tools”, “workshop” as a two-element neighborhood

However, we have a process that can be made more powerful over time. The current process starts with a measurement of the linguistic variation in text using a simple word-level 5-gram.

The measurement is completed after we use a Visual Text text-analyzer, hand-constructed to find sentence boundaries in the collection of 312 fables. The text-analyzer was developed using the IDE purchased from Text Analysis International Corporation.
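The hand-constructed Visual Text analyzer is not reproduced here. As a rough, hypothetical stand-in, the Python sketch below splits on sentence-final punctuation followed by whitespace; the name `split_sentences` and the splitting rule are our own assumptions. It mis-splits abbreviations such as “Mr. Fox”, which illustrates why a purpose-built analyzer can do better.

```python
import re

# Naive rule (an assumption, not Visual Text): split after '.', '!' or '?'
# whenever that character is immediately followed by whitespace.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def split_sentences(text):
    """Return the non-empty sentences of `text` using the naive rule above."""
    return [s.strip() for s in SENTENCE_END.split(text) if s.strip()]
```

For example, `split_sentences("A Wolf met a Lamb. The Lamb ran! Why?")` gives three sentences, while `"Mr. Fox spoke."` is wrongly broken after “Mr.”.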

In early 2003 OntologyStream did some basic research on the CCM model that has been used in the product NdCore, from Applied Technical Systems Inc. This research was instrumental in generalizing several aspects of our previous work on categoricalAbstraction (cA) and eventChemistry (eC).

One of the generalizations recognizes that errors are made when one produces a tree branch from the 5-gram. The effect of the NdCore measurement is to treat the words next to a center word as more significant than the words two positions away from the center word. It is treated as if there is a broad-term/narrow-term relationship between the center and the words next to it, and between those adjacent words and the words one further position removed from the center.

We weaken the representation of meaning as related to nearness in sentences by treating the 5-gram as a center word together with the other four elements of the 5-gram, taken simply as members of a set. The set is then a neighborhood in which all four elements are treated equally, so the mistake made by the NdCore measurement is avoided. Later, as a reification process, we apply an ambiguation/dis-ambiguation method using a similarity/dis-similarity ontology.
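To make the contrast concrete, here is a minimal Python sketch, under our own reading of the text, of two ways to encode one 5-gram window: a positional reading in which each neighbour keeps its distance (1 or 2) from the center, standing in for the tree-branch view described above, and the set reading used here. The function names are hypothetical, and we assume the window takes up to two words on each side of the center, which yields zero to four neighbours at sentence edges.

```python
def five_gram_windows(words, half_width=2):
    """Yield (center, left, right) for each word; windows shrink at sentence edges."""
    for i, center in enumerate(words):
        left = words[max(0, i - half_width):i]
        right = words[i + 1:i + 1 + half_width]
        yield center, left, right


def as_distance_map(center, left, right):
    """Positional (tree-branch) reading: each neighbour keeps its distance from the center."""
    distances = {}
    for d, w in enumerate(reversed(left), start=1):
        distances[w] = min(d, distances.get(w, d))
    for d, w in enumerate(right, start=1):
        distances[w] = min(d, distances.get(w, d))
    return center, distances


def as_neighborhood(center, left, right):
    """Set reading: the surrounding words are equal, unordered members of a set."""
    return center, frozenset(left) | frozenset(right)
```

With the reduced sentence `wolf saw lamb drinking stream`, the center `lamb` gets the neighbourhood {wolf, saw, drinking, stream} with no ordering among its members, while the positional reading still records `saw` and `drinking` as nearer than `wolf` and `stream`.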

The generalized n-gram and the generalFramework theory can be used to make this measurement and encoding process more powerful over time.

The ambiguation/dis-ambiguation methodology has to involve humans who manipulate a controlled vocabulary design to find terms and phrases that are semantically similar, and to separate ambiguated neighborhoods. It also has to involve the measurement and encoding process that we call the Actionable Intelligence Process Model, which is derived from work done by OntologyStream Inc. on the Total Information Awareness project at DARPA.

Section 3: The Fable Card Deck, construction issues

One should review the notions surrounding the year 2000 presentation to an e-Gov conference on the method of Descriptive Enumeration (DE).

The method of descriptive enumeration has two core concepts related to issues also present in the foundations of mathematics and logic. These are completeness and consistency.

In logical positivism, consistency can be taken to depend on the independence of the underlying axioms, since if the axioms are not independent there are entanglement issues that can lead to inconsistency. Logical positivism is a version of scientific and mathematical reductionism. The knowledge sciences must carefully challenge reductionism and show that it is a form of fundamentalism. In any case, one has to understand that reconciliation of terminology is necessary for highly efficient, interacting communities of practice.

The necessity of reconciliation of terminology is why we have the concept of a controlled vocabulary. This vocabulary must admit the ambiguity that is essential to the use of natural language. This essential ambiguity requires that text understanding not be strongly reductionist, as defined by the traditions of the current government-funded paradigms in science.

OK, so the problem that we are facing is one that we claim cannot be faced squarely using a dogma that requires meaning to be reducible un-ambiguously to knowledge representations such as the Cyc Corp upper ontologies.

The fables are a small collection of around 3000 sentences. After applying a stop word list, we pass the 5-gram over the “reduced” sentences to produce an Input Bag of Branches (IBB), each branch having a center and zero to four elements in the center’s neighborhood. We then make a global convolution that produces the Output Set of Neighborhoods (OSN). The global convolution is over the IBB, and local convolutions start at the ends of the branches and gather all ends having the same string value into a simple tree, as in the NdCore process. This gathering is achieved using an In-Memory Referential Base, derived from, but different from, the Primentia patent. The tree is then fractured so that the tree root node becomes the center of a neighborhood in which all elements in the branches are equidistant from this center.
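The IBB-to-OSN step can be approximated in a few lines of Python. This is a sketch under stated assumptions, not the ORB implementation: a plain dictionary stands in for the In-Memory Referential Base, the stop-word list and tokenizer are hypothetical, and the gather-then-fracture step is collapsed into a set union keyed by the center string, which on our reading yields the same equidistant neighborhood.

```python
import re
from collections import defaultdict

# Hypothetical stop-word list for illustration; the list actually used is not given.
STOP_WORDS = frozenset({"a", "an", "the", "and", "of", "to", "in", "his", "her", "was"})
TOKEN = re.compile(r"[a-z]+(?:'[a-z]+)?")


def reduce_sentence(sentence):
    """Lower-case, tokenise, and drop stop words to get a "reduced" sentence."""
    return [w for w in TOKEN.findall(sentence.lower()) if w not in STOP_WORDS]


def input_bag_of_branches(sentences, half_width=2):
    """IBB: one (center, neighbourhood) pair per word occurrence, at most 4 neighbours."""
    bag = []
    for sentence in sentences:
        words = reduce_sentence(sentence)
        for i, center in enumerate(words):
            neighbours = words[max(0, i - half_width):i] + words[i + 1:i + 1 + half_width]
            bag.append((center, frozenset(neighbours)))
    return bag


def output_set_of_neighborhoods(bag):
    """OSN: gather all branches sharing a center string into one flat neighbourhood."""
    gathered = defaultdict(set)
    for center, neighbours in bag:
        gathered[center] |= neighbours  # every element ends up equidistant from the center
    return {center: frozenset(n) for center, n in gathered.items()}
```

For example, reducing “The Smith worked in his workshop.” and “The smith sold tools.” gives the center `smith` the neighborhood {worked, workshop, sold, tools}, in the spirit of Figure 2.1.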

The OSN is then parsed to produce an input file, called “datawh.txt”, for the SLIP browsers, so that we can see the local constructions within a virtual rendering of the entire “semantic web”. Our problem is now to reify these local constructions, given that we know we have made several mistakes in joining term occurrences that are really in different contexts, as in the bush(1)/bush(2) case. We also have not applied a thesaurus ring, as is done when one has linguistic and ontology services. Addressing these problems can make the OSN better, but does not eliminate the need for human inspection and reification processes.
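A thesaurus ring has not been applied in the current work. Purely as a hypothetical illustration of what that step might do to an OSN, the sketch below maps variant terms to a canonical term chosen by a human editor and merges the neighborhoods that then collide. The ring contents and the function name are invented for the example. This only merges synonyms; separating bush(1) from bush(2) needs occurrence-level context and human judgment, which a center-to-set mapping like this one no longer holds.

```python
from collections import defaultdict

# Hypothetical thesaurus ring: variant term -> canonical term chosen by a human editor.
THESAURUS_RING = {"blacksmith": "smith", "smithy": "workshop", "forge": "workshop"}


def apply_thesaurus_ring(osn, ring):
    """Rewrite centers and neighbours to canonical terms, merging neighbourhoods that collide."""
    merged = defaultdict(set)
    for center, neighbours in osn.items():
        key = ring.get(center, center)
        merged[key] |= {ring.get(n, n) for n in neighbours}
    # Assumption: a term is not listed in its own neighbourhood after merging.
    return {c: frozenset(n - {c}) for c, n in merged.items()}
```

Merging shrinks the number of SM-Indicators, but the choice of canonical terms remains a human, controlled-vocabulary decision.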

In any case, a single OSN will have many more SM-Indicators than one wishes to use in a controlled vocabulary management system, such as SchemaLogic’s SchemaServer.

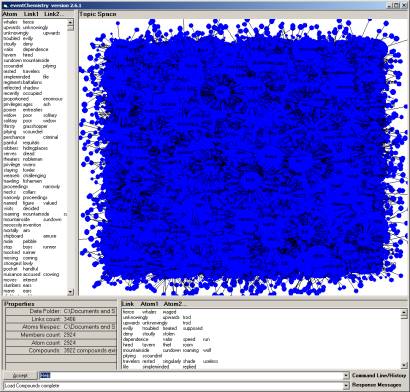

Figure 3.1: The random projection of all local SM-Indicator neighborhoods into a single space

Figure 3.2: One of the 3406 SM-Indicator neighborhoods for the Fables