Saturday, August 21, 2004

Stratified Ontology

We walk carefully here because many individuals do not have an intuition about what dedicated scientists are talking about and will get lost fast.

As we develop issues related to a descriptive enumeration of patterns and components of patterns, it is necessary to be guided by a literature in which general systems theory and natural science bring an understanding of those elements of nature that are complex. But if those who evaluate new approaches in information science are not informed by the necessary literature, then the Nation cannot overcome several decades of poor decisions in information science and funding support. It is hard to admit institutional mistakes, but perhaps we, as a society, must acknowledge that the way information technology was developed has led to the non-interoperability of databases and the reinforcement of stove piping.

The Nation needs a K-12 curriculum in the science of knowledge systems. But we have to deal with current-day realities. Many of those who are successful in the consulting industry are successful because of business practices that are not in line with truth seeking. These practices are about making money, and if the truth conflicts with profit motives then truth is often methodologically marginalized. Certainly other industries, such as tobacco and gambling, have arisen where the evidence from objective social science is that pure profit motives have dominated moral considerations.

We need both a consulting industry and a general population that is comfortable with the types of processes that are involved in truth seeking. We need a population that will not allow the type of indiscretion that has occurred in information science funding. But our curriculums are not preparing students to understand, because the current educational system is too tightly coupled with entertainment or business.

So, having set the stage for some analysis of methods that are not likely to be understood by the typical MBA, we return to this analysis.

An argument is being made that structural linkages, however measured, are structural, and that structure/function knowledge is to be acquired only when at least some of the structure has been measured. Computer encoding into Orb and data mining processes over Orbs allow a precise measurement of structure. The structure is dependent on the measurement processes, and this is why ClearForest-type technology is important, and why one needs Text Analysis International Corporation-type parsers. Yes, there is a bootstrapping that involves making ontological commitments as part of the measurement process, but this is addressed within the actionable intelligence process model.

As structure/function knowledge becomes involved, through some type of (human) empirical observation, a specific theory of types also evolves and at some point becomes a theory of meaning. The evolution of these theories of meaning needs to be governed not by the money that is to be made in the immediate future but by some objective criterion of truth that is often far more complex than is recognized within the current cultural focus on entertainment or business.

It is conjectured that Acquired Learning Disability (ALD) develops and is reinforced by the surface nature of curriculum in mathematics and science. There is little social expectation that most students will become knowledgeable about deep questions related to the limitation of Hilbert mathematics, or the quantum mechanical aspect of perception and awareness. But discussions with young people reveal a desperate need to engage in these types of questions. A type of counter culture exists that has become wise to the establishment and reinforcement of ALD. The slow and poor process of imposing a poorly received curriculum in mathematics produced ALD. As a result, the adult intellectual background lacks the elements needed to understand how leading scholars have addressed these questions. The kids are aware of this, but as yet there is little that anyone can do about the problem. We first have to acknowledge the presence of ALD in order to treat the cultural roots of this self-imposed limitation.

Organizational stratification of the physical processes involved in memory, perception, awareness, cognition and anticipation is one of these essential elements. This stratification is what the peer review community rejects and what program managers do not understand.

In Readware’s product, there are two sets of "acquired informational resources". But there is a generalizable methodology here also. We call these two resources “Referential Information Bases” (or RIBs), setting aside for now the issue of encoding or search. The Hilbert encoding optimizes the solution of the set membership question; I will discuss this at length in a separate bead [83].

One Rib is the set of substructural relationships between CATEGORIES of words, where each category is indicated by a three-letter string that makes no distinction between elements of the category. These are structural and ARE n-aries,

< r, a(1), . . . , a(n) >

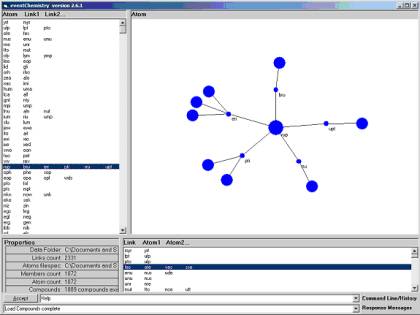

Each node in the n-ary has the same "relationship" to the relationship r, and the n-ary is therefore expressed completely as a set of 2-aries. There is no global information other than the fact that if I “convolve” the set into a graph I get an n-ary, as in the following picture:

A “subject matter indicator neighborhood” over a set of Readware substructural categories

Each one of these nodes is a categorical abstraction (cA) atom based on some specific and well-defined measure of invariance. The fact that n nodes are in a relationship might be important, and if the measurement is complete WOULD be important, as would some theory of relationship that differentiates one node from another; i.e., the r in < r, a(1), . . . , a(n) > has a different facet when considering only one of the nodes.
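The decomposition described above, where an n-ary is carried entirely by its 2-aries and recovered by grouping them, can be sketched in Python. This is a minimal illustration only and not Readware's implementation: the function names `to_binaries` and `convolve`, the relationship label `r1`, and the three-letter category strings are all hypothetical.

```python
from collections import defaultdict

def to_binaries(nary):
    """Split an n-ary <r, a(1), ..., a(n)> into the set of 2-aries (r, a(i))."""
    r, *atoms = nary
    return {(r, a) for a in atoms}

def convolve(binaries):
    """Group 2-aries by their shared relationship r.

    Each key of the result is a relationship; each value is the set of
    category atoms attached to it, i.e. the node set of one n-ary.
    """
    grouped = defaultdict(set)
    for r, atom in binaries:
        grouped[r].add(atom)
    return dict(grouped)

# Hypothetical example: one relationship over three category atoms.
nary = ("r1", "abc", "def", "ghi")
pairs = to_binaries(nary)
graph = convolve(pairs)
```

Note that the 2-aries carry no ordering and no node-specific facet of r; anything of that kind would have to come from a separate theory of relationship, as the paragraph above observes.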

But the n-ary is a compound shaped by function in a specific environment, so Ballard is completely right in regard to the preference for n-aries. The issue is reification of the function that these compounds might play. Ballard’s Mark 3 knowledge base system has a process for doing this, and this process is dependent on his encoding mechanisms and human work.

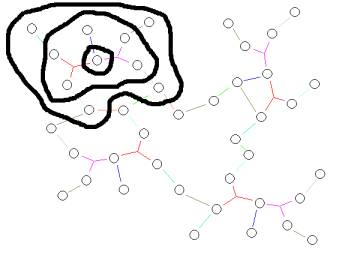

Because the “structural” n-aries are mixed from different contexts, a human, perhaps aided by algorithmic data mining processes, examines the “measured” n-aries. This examination is a reification process that “separates” subject matter indicator neighborhoods so that the chemistry of semantic compounds can be identified.

Subject matter indicator neighborhoods

The theoretical conjecture that Readware, and other substructural methods, depend on is that the semantic compound derived from substructural analysis identifies signatures that are independent of the actual words used. Evidence for this method is found in cyber security, where patterns of bits in computer code are measured and basins of attraction are found in “substructural bit space”. These basins of attraction point at functional properties of code. The set of substructural invariances supplies the implicit dimensions of the mathematical (Hilbert) space in which the basins of attraction sit. A general theory for this is described in Chapter One of my on-line book.

The second Rib is a human concept level set of n-aries. Here the concept-indicators are produced in a formative fashion.

I point out that there is something in common between the specifics of Readware’s substructural Rib and the Applied Technical Systems CCM patent (see Orb notational paper). What is in common is not something that business process will be happy to talk about, since this commonality reduces the competitive advantage that business people need in order to practice the type of economics that we have institutionalized in the information technology sector. This commonality is what the Knowledge Sharing Foundation would reveal as part of university-based curriculum.

The economic wealth that would be generated by Knowledge Sharing Foundation justifies an expenditure of 60 – 200 million dollars by the Congress in the National Project to Establish the Knowledge Sciences.

If a very focused target of investigation were chosen, for example all CEO discussions in major corporations or all weblogs in a group of weblogs, one would develop a substructural Rib that was specific to understanding that part of the social discourse. The same can be done with all public discussions about genetic engineering and pharmaceutical framing. These different domains of discourse have small structural differences that are nonetheless "semantically" significant. This is shown in the ClearForest technology.

The BCNGroup Glass bead Games is designed to stand up topic maps, using subject matter indicators that can be used to navigate into real-time discussions about various things. Of course, this might be more interesting than prime time television, and certainly would displace the current excessive television-based advertising expenditures. Businesses are already exploring the use of real-time analysis of social discourse as a means to sell product in a more targeted fashion. (Notice the funding pattern at In-Q-Tel.)

The invariance of the substructure is observed, not derived from a theory. This is why computer data mining processes set up the reification of formative ontology. The human is needed to make the reification, and the reification is ultimately dependent on the human’s tacit awareness in a present moment.

The classical example of the stability of the substructure is the stability of the categories of atoms, as seen in the physical chemistry periodic table. (There is more to say here.)

It is useful to say that a "semantic envelope is created" from the purely computer-based data mining for the stratified linkages. Bringing the data into a temporal window, in order to catch the nuances that are highly dynamic and change over time, sometimes abruptly, can refine the envelope. In 1995 and 1996, I developed and published formal structure to measure the statistical properties so that shifts in the process compartment can be measured, allowing one to know when the current formative ontology substructure is no longer relevant. This work has not found a way to be used and has not found a way to be published. It is my fault; I am just not good at getting the system to work for me. But the work might be important?
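The idea of detecting a shift between temporal windows can be illustrated with a small Python sketch. This is an assumption-laden stand-in, not the formal structure from the 1995 and 1996 work, which is not described here: it compares the frequency distribution of category strings in a reference window against a current window using total variation distance, and the names `distribution`, `total_variation`, `shifted`, the threshold of 0.3, and the sample windows are all hypothetical.

```python
from collections import Counter

def distribution(window):
    """Relative frequency of each category string in a window of observations."""
    if not window:
        raise ValueError("window must contain at least one observation")
    counts = Counter(window)
    total = sum(counts.values())
    return {key: count / total for key, count in counts.items()}

def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 to 1)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

def shifted(reference, current, threshold=0.3):
    """Flag a shift when the windows differ by more than the (assumed) threshold."""
    return total_variation(distribution(reference), distribution(current)) > threshold

# Hypothetical windows of three-letter category strings.
reference = ["abc", "abc", "def", "ghi"]
similar = ["abc", "def", "abc", "ghi"]   # same mix, different order
changed = ["xyz", "xyz", "xyz", "abc"]   # mostly a previously unseen category
```

Here `shifted(reference, similar)` is false and `shifted(reference, changed)` is true. A flagged shift would only be a prompt for the human to revisit the formative ontology; the threshold and the choice of distance are placeholders for whatever measure the analyst trusts.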

When the semantic envelopes are mapping well to the functional expressions of real time events, then the process compartments in the computer modeling and in the real world align. The substructure becomes highly predictive of function, even if the structural measurement is picking up novel patterns. The system has become “anticipatory”.

The anticipatory response is used in a mechanical way to draw out the unique stratified structure and this unique structure is then used to create a "plausible" interpretation of the unique textual occurrences. This is HIP (Human-centric Information Production) using stratified ontology and a formative process in real time.

Paul