eventChemistry ™ .

Verb Map Analysis using SLIP,

Tutorial and Design Document

by Paul Prueitt, PhD

December 30, 2001

Section 1: On linguistics and experimental research on human memory

We start this tutorial with the observation that the origin of verbs is quite different from the origin of nouns. We are interested in a discussion on this point, but we also recognize that the problem is quite difficult. It is not easy to see a clear difference between the origin of verbs and the origin of nouns from a surface reading of the linguistic literature. Perhaps the problem of the origins of nouns and verbs is related to the notions of declarative and procedural knowledge. We are more familiar with this literature, and will try to make our thoughts clear using it.

John Sowa often starts with a distinction between declarative knowledge and procedural knowledge. His work is foundational to much of the machine ontology work that has been done by the artificial intelligence community and the information technology community. In general terms, he points out that a paradigm of declarative knowledge constructs has largely failed to produce human-like cognitive processing in computers. Sowa and others turn to a paradigm of what they call procedural knowledge constructs. But it is not always clear what is meant by “procedural knowledge construct”.

In the first two paragraphs of the Introduction of Sowa’s 1997 book “Knowledge Representations” he spells out a certain intellectual position as follows:

“Like Socrates, knowledge engineers and systems analysts play the role of midwife in bringing knowledge forth and making it explicit. They display the implicit knowledge about a subject in a form that programmers can encode in algorithms and data structures. In the programs themselves, the link to the original knowledge is only mentioned in comments, which the computer cannot understand. To make the hidden knowledge accessible to the computer, knowledge-based systems and object-oriented systems are built around declarative languages whose form of expression is closer to human languages. Such systems help the programmers and knowledge engineers reflect on "the treasures contained in the knowledge" and express it in a form that both the humans and the computers can understand.

“Knowledge representation developed as a branch of artificial intelligence -- the science of designing computer systems to perform tasks that would normally require human intelligence. But today, advanced systems everywhere are performing tasks that used to require human intelligence: information retrieval, stock-market trading, resource allocation, circuit design, virtual reality, speech recognition, and machine translation. As a result, the AI design techniques have converged with techniques from other fields, especially database and object-oriented systems. This book is a general textbook of knowledge-base analysis and design, intended for anyone whose job is to analyze knowledge about the real world and map it to a computable form.”

But the assumptions and claims made here seem far removed from the experimental cognitive neuroscience literature.

Sowa’s stated objective is to “express knowledge in a form that both the humans and the computers can understand”. Our question is whether this objective can be pursued in a way that eventually leads to a reconciliation of computer technology with the experimental work from cognitive science.

A more realistic goal might be to develop scholarship on process models and data structures that produce some type of “sign” system, together with a control interface that uses this sign system. Clearly noun-type topics can form a backbone to this system, but we need to define process models that create these topic-maps as an emergent outcome. The sign system would then be “held out” by the computational processes to be interpreted (known) by humans. We could then treat the machine as a machine, rather than acting as if the machine and the living system were the same category of thing.

It is obvious that there are many different approaches to declarative and procedural knowledge, and many schools of thought. However, we identify our bias as being more or less framed in the terms developed by certain cognitive science schools, particularly as expressed in Schacter and Tulving’s edited volume “Memory Systems 1994”, which was published in 1994 by MIT Press.

“Let us now consider the distinction between “procedural” and “declarative” information storage, which has been applied to human amnesia as well as to the animal domain and which enjoys a certain amount of attention at present.

“Historically, this approach has suffered from a lack of clear definition of just what constitutes each type of learning. There has been some evolution in this program in recent years, involving a deeper understanding of the diverse memory systems and processes covered by the term “procedural”. This led some researchers to abandon the term “procedural” in favor of a new term, “nondeclarative”. This new label hardly helps one distinguish between the two systems in a principled fashion. In general, one understands the distinction between declarative and nondeclarative only because it says pretty much what all the other dichotomies say: some systems are involved with stimulus-response or habit-like learning; others are involved with “cognitive” learning.” (Lynn Nadel, page 48, Memory Systems 1994)

Our bias is that multiple memory systems exist and that “memory of language” is shaped by the neurophysiology of human memory production. So the distinctions made in the chapters of “Memory Systems 1994” should be seen in any operational theory of human knowledge.

Of course, no such “operational theory” currently exists, largely, we claim, due to the poverty of philosophical models of human thought. The philosophical grounding of KM in ancient mind games must be challenged, because these philosophical groundings do not account for modern experimental work on human memory, awareness and anticipation (see for example: Prueitt, 1997). They are dead paradigms that endlessly talk about illusions, such as Karl Popper’s three worlds. These philosophical frameworks from ancient times are rooted in a folk psychology (Paul Churchland) where solutions to unsolvable problems occupy the minds of wasteful fools.

The claims by technologists about computer understanding also do not seem to help us unify cognitive science and knowledge engineering. What is wrong here is that the claim,

“that a machine can have the cognitive properties of biological systems”,

is a claim supported by economic investment but not experimental science. The claim is an advertisement for information technology and artificial intelligence, but there is no substance to what is claimed as results. Quoting Sowa’s Introduction again,

“But today, advanced systems everywhere are performing tasks that used to require human intelligence: information retrieval, stock-market trading, resource allocation, circuit design, virtual reality, speech recognition, and machine translation.”

But “speech recognition by a computer program” is not even of the same category of process as “speech recognition by a human being”. The computer program is a formalism. It is an artificial system. It exists in an unnatural way. The human being has processes that are stratified in physical space, where quantum mechanical fluctuations, co-existing somehow with the metabolic processes, give rise to something that we can only call “awareness” but cannot fully explain.

We have simply built a large and very important industry based on a deception, mostly a self-deception. At some point, one must agree with Roger Penrose that the “Emperor has no clothes” (Penrose, The Emperor’s New Mind, 1989).

Perhaps we have to bypass the literature on knowledge management and even the literature on philosophical notions about “how thought follows”. Clearly, cognitive science is about the phenomenon of human knowledge. How can we as a society maintain such a deep gap between technologists (who get quite a bit of economic support for their work) and cognitive scientists (who as yet have not been able to bring the science to the technology)?

Why is this important to our society? The answer is suggested by looking at the confusion that exists in the business and information technology communities regarding the nature of individual knowledge and knowledge sharing in human communities.

Stepping beyond the business and information technology literature is not so easy. The difficulty lies partially in making this step correctly, and partially in the opposition with which parts of the business and information technology communities will meet the new theory. The strategy we have adopted is to appeal not so much to the authority of experimental literature as to direct reasoning and ultimately to the performance of eventChemistry software. We ground our theory in cognitive neuroscience, stratified theory, general systems theory and linguistics not to justify the theory, but to lend evidence where we feel it is proper to do so.

What are we seeking?

We seek to build new technology that provides SenseMaking environments where computer processes facilitate all aspects of social and private investigations about (1) natural systems via instrumentation and (2) artificial worlds such as the Internet.

We ask the reader to reflect on how reasonable it seems to equate nouns with declarative knowledge and verbs with procedural knowledge. The purpose of this reflection will become apparent quickly. There is a certain appeal to the notion that nouns are derived from declarative knowledge, and verbs are derived from procedural knowledge. I declare that “that” is a “tree”. The procedure is to “run” home and get the book.

The observation that nouns and verbs have a different origin can be supported by a direct appeal to one’s own experience. Nouns are about things that exist. Verbs are not. One can point to a tree as an instance of the word tree. But can one point to a “running” or a “run”? What stratified theory suggests (at least to us) is that nouns are declarative-like and verbs are procedural-like.

The point, which will be picked up again in a bit, is that human memory research, with some great difficulty, wants to identify a difference between habit responses and responses that are mediated by a cognitive facility. What this means for knowledge management theory is even more difficult to say given the state of the sciences. A quote from “Memory Systems 1994” will help make this point.

According to Cohen, Eichenbaum, and colleagues, declarative memory rests on a system of relational representations and by virtue of this conveys to behavior the ability to utilize memory to behave adaptively in novel situations. Procedural memory, by contrast, is represented in systems that are dedicated and inflexible.

But what has this to do with nouns and verbs? What appeared to be a proper distinction based on linguistics does not (on the surface) appear to be proper at all if we base our thought on memory research. Why do there seem to be such great differences between the first major dichotomy in the parts of speech and the first major dichotomy in the types of memory systems?

Can we think about both linguistics and cognitive science at the same time?

The answer to this question is conditional and conjectural. We conjecture that the answer will always be “no” if we treat the world as reducible to a single common organizational reality. We need stratification theory to account for complexity in the natural world. Issues like individual viewpoint, cultural viewpoint, translatability problems (Benjamin Whorf), and formative cause are all issues that require a stratified theory.

The development of SenseMaking environments

We have suggested that distinctions experimentally conjectured from the study of human memory systems should also exist in the new science of knowledge systems. This principle of multiple knowledge systems might apply to both individual knowledge and social knowledge (or the knowledge sharing that occurs in communities). We need not hold this as a strong claim, but as one that is accepted for the time being. We suggest that there are two specific problems related to the development of machine ontology that might be addressed by a principle of multiple knowledge systems.

1) The problem of individual versus collective knowledge

2) The problem of a difference between “declarative” knowledge and “procedural knowledge”.

The problem of individual versus collective knowledge might be framed in a stratified framework where the individual has knowledge experience that is essential to having knowledge, but is an experience that is not accessible to anyone else. Collective knowledge would appear to depend on the expression of many individual experiences. Natural language would be one avenue through which this expression can be measured.

So individual knowledge would exist at one level of organization (within the mind-system of the individual). Collective knowledge then exists within the organizational level of the social unit. The interface would then be complex in the formal sense established by Peter Kugler’s review of Robert Rosen’s work on category theory and biomathematics.

Orthogonal to the problem of individual versus collective knowledge we might find the problem of declarative versus procedural knowledge.

The social use of language is reinforced outside of the mere existence of one individual, so yes any word must have an abstract nature that appears universal and thus useable in social expression.

We can now make a tri-level machine architecture that helps us produce real synthetic intelligence. This architecture produces and uses a stratified taxonomy with organizational levels at the bit stream, the intrusion detection system (IDS) level, the incident management level, and the policy level. Each level except the bottom and the top has a formative ontology based on interactions between the level below and the level above.

This follows the tri-level model of human awareness based on human memory research, research on the perception system, and research on anticipatory responses by biological systems. In the artificial worlds (like the world of financial transactions and cyber warfare), memory is the data invariance as measured by Service Link analysis, Iterated scatter-gather, and Parcelation (SLIP).

Here are the essential questions.

1) In the fable collection, the Verb-maps seem to be a better semantic linkage than the Noun-maps. Is this due to an important difference between verbs and nouns?

2) We are considering the development of a metaphor between linguistics and eventChemistry related to incident management and intrusion detection. Is this metaphor as powerful as we now suspect that it is?

In any case, the real world is filled with patterns and also new types of events. The structure of causes changes (as we experience time), and things that were once not possible become possible (not because the possibility existed and was not being used, but because a previously non-existent possibility came into existence via emergence). For example, the Universal Plug and Play service provided by Microsoft in the XP operating system changes the landscape for serious hacker/cracker activity.

It is as if the Airline companies allowed all terrorists everywhere free passage to an airport filled with fully loaded commercial aircraft, removed the FAA tracking capability, and then management left for a vacation, or the Christmas break. The other structural constraint is the fact that few terrorists know how to fly these planes. So we have some time to prepare for the XP Universal Plug and Play service hacker/cracker activity and hope that groups in China and elsewhere do not feel a need to harm the Western economic system.

We conjecture now that we need SLIP as a front end to an ontology constructor for encoding the Intrusion Detection System (IDS) data invariance found by SLIP and reified by CERT analysts. There are several systems that we are evaluating. Our questions concern whether knowledge systems "about" intrusions and incidents can be developed using this "non-linguistic" approach to building tokens based merely on data invariance. We believe that this will be easier than anyone can imagine now, given the huge amount of work already completed.

The data, and a tutorial on using SLIP

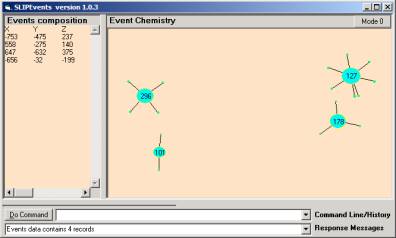

In Figure 1b, we show a SLIP Framework developed to identify small event compounds in the fable collection. With the conjecture in Figure 1a, we create a non-specific relationship between verbs that are shared by a fable. The event atoms in Figure 1c are verbs whose valences are the names of fables (each indicated by a number).

Figure 1 (panels a, b, c): The three OSI Browsers

Clutched: 25

Attacked: 130, 14, 181, 25, 263, 302, 32

Table 1: Four verb atoms and valences

By clicking on the hyperlinks one can see the fable referenced by the number.

Figure 2: The event compound for Figure 1c

Figure 2 simply organizes the data derived from a parsing program. Several things can be noticed. First, there is a relationship between the verb attacked and the verbs clutched, spared and hired. The hyperlinks in Table 1 allow one to check why this relationship exists. It is a simple relationship, and not much meaningful information seems to be acquired.
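The relationship described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the SLIP implementation: the names `verb_atoms` and `event_compound` are assumptions introduced here, and only the two atoms actually listed in Table 1 are included. Two verbs are linked whenever their lists of fable numbers overlap.

```python
from itertools import combinations

# Verb atoms as listed in Table 1: each verb maps to the set of fable
# numbers in which it occurs. Only the two listed atoms are included.
verb_atoms = {
    "clutched": {25},
    "attacked": {130, 14, 181, 25, 263, 302, 32},
}

def event_compound(atoms):
    """Return {(verb_a, verb_b): shared_fables} for each pair sharing a fable.

    Hypothetical helper for illustration; not part of eventChemistry.
    """
    links = {}
    for a, b in combinations(sorted(atoms), 2):
        shared = atoms[a] & atoms[b]
        if shared:
            links[(a, b)] = shared
    return links

links = event_compound(verb_atoms)
for (a, b), fables in links.items():
    print(a, "--", b, "via fables", sorted(fables))
```

With the Table 1 data, the only link is between attacked and clutched, through fable 25.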

But what we have are two things of great significance:

1) The relationships indicated by the event compound are identified structurally, so that a computer program can in fact use these relationships to search for other occurrences of the same relationship. In computer Incident Management and Intrusion Detection (IMID) applications, this is of great importance because event structures that are identified by humans as being important can be quickly turned into IDS rules.

2) The simple co-occurrence of verbs, within fables, is visualized first as the conjecture and then as clusters of verbs. These clusters may lead to a theory of verb use by Aesop in the fables.
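As a rough illustration of the first point, a co-occurrence pattern that a human has marked as important can be stored as a set of verbs and matched against other records. The sketch below is an assumption about how such a search might look, not a description of any IDS rule language; the function `find_occurrences` and the sample records are hypothetical and are not taken from the fable collection.

```python
def find_occurrences(pattern, records):
    """Return the ids of records that contain every verb in `pattern`.

    `records` maps a record id to the verbs found in that record.
    Hypothetical helper for illustration only.
    """
    wanted = set(pattern)
    return sorted(rid for rid, verbs in records.items() if wanted <= set(verbs))

# Hypothetical records, invented for this example.
records = {
    "r1": ["attacked", "clutched", "fled"],
    "r2": ["attacked", "spared"],
    "r3": ["clutched", "attacked"],
}

matches = find_occurrences({"attacked", "clutched"}, records)
print(matches)
```

The same subset test would apply whether the records are fables or, in an IMID setting, groups of log events.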

We can flip the conjecture to organize fables by verb co-occurrence. When is a specific verb used in more than one fable?

Figure 3: Verbs inducing a non-specific relationship between fables

296: feeling, lived, put, went

178: passed, replied, walk

127: caught, come, entreated, lived, pray, put, replied

101: help, replied

Table 2: Four fable atoms and valences
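The flipped conjecture can be illustrated with the data in Table 2. The following Python sketch is an assumption about one simple way to answer the question "when is a specific verb used in more than one fable?"; the names `fable_atoms`, `invert`, `shared_verbs` and `fable_links` are introduced here for illustration and are not part of the SLIP software.

```python
from collections import defaultdict
from itertools import combinations

# Fable atoms from Table 2: fable number -> verbs attached to that fable.
fable_atoms = {
    296: {"feeling", "lived", "put", "went"},
    178: {"passed", "replied", "walk"},
    127: {"caught", "come", "entreated", "lived", "pray", "put", "replied"},
    101: {"help", "replied"},
}

def invert(atoms):
    """Flip fable -> verbs into verb -> fables (hypothetical helper)."""
    index = defaultdict(set)
    for fable, verbs in atoms.items():
        for verb in verbs:
            index[verb].add(fable)
    return dict(index)

verb_index = invert(fable_atoms)

# Verbs used in more than one fable.
shared_verbs = {v: f for v, f in verb_index.items() if len(f) > 1}

# Pairs of fables linked by at least one shared verb.
fable_links = {}
for a, b in combinations(sorted(fable_atoms), 2):
    common = fable_atoms[a] & fable_atoms[b]
    if common:
        fable_links[(a, b)] = common

print(shared_verbs)
print(fable_links)
```

For these four atoms, the shared verbs are lived and put (fables 127 and 296) and replied (fables 101, 127 and 178), which is what ties this small cluster together.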

Figure 4: The random scatter of fable atoms in the Event Browser

In Figure 4 we have the four fable atoms for a small cluster. These are randomly scattered into an objectSpace where various types of eventChemistry transforms can be made. Work on these transforms is still in progress.
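A random scatter of this kind can be sketched as follows. The sketch assumes, purely for illustration, that the objectSpace is a two-dimensional unit square; the actual objectSpace used by the Event Browser is not specified here, and the function `scatter` is a hypothetical name.

```python
import random

def scatter(atom_ids, seed=None):
    """Assign each atom a random (x, y) position in the unit square.

    Assumption: a 2-D unit square stands in for the objectSpace.
    """
    rng = random.Random(seed)
    return {atom: (rng.random(), rng.random()) for atom in atom_ids}

# The four fable atoms of Table 2, scattered with a fixed seed so that
# the layout is reproducible.
positions = scatter([296, 178, 127, 101], seed=0)
for atom, (x, y) in positions.items():
    print(atom, round(x, 3), round(y, 3))
```

Any later transform would then start from these initial positions.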