
Mediation: Conflict Between Cultures

Taxonomy

Note 4: Subject Matter Indicator Neighborhoods

Tuesday, November 18, 2003

A member of the BCNGroup asked the question:

"BTW that was an interesting piece of software on fairytales--what does it do?"

The software architecture is being described in tutorials.

However, we can give a short description here, as an outline.

The software is at:

http://www.ontologystream.com/admin/temp/fableAtoms.zip

and several tutorials on the software are at:

http://www.ontologystream.com/cA/index.htm

The first step in our procedure is to identify sentence boundaries using an autonomous, machine-only algorithm. This step will miss some sentences, but the intent is to find patterns that occur more than once, so losing some patterns, or parts of patterns, is acceptable. If the sentence structure is always good, then no information is lost.
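As a rough illustration of machine-only sentence boundary detection, here is a minimal Python sketch. The boundary rule (sentence-ending punctuation, then whitespace, then a capital letter or opening quote), the function name `split_sentences` and the sample text are assumptions made for this example; they are not taken from the fableAtoms software.

```python
import re

# Hypothetical boundary rule: '.', '!' or '?' followed by whitespace and then
# a capital letter (optionally preceded by an opening quotation mark).
_BOUNDARY = re.compile(r'(?<=[.!?])\s+(?=["\u201c]?[A-Z])')

def split_sentences(text):
    """Split text into candidate sentences. Imperfect by design."""
    flat = " ".join(text.split())  # collapse line breaks and repeated spaces
    return [s for s in _BOUNDARY.split(flat) if s]

# Made-up sample text in the style of a fable.
fable = ("A Wolf saw a Lamb drinking at a brook. He resolved to attack it! "
         "The Lamb asked, \"Why?\" The Wolf gave no answer.")

sentences = split_sentences(fable)
for s in sentences:
    print(s)
```

The rule does not see the boundary after the quoted question, so the last two sentences stay joined. This is the kind of miss that the procedure tolerates, since only patterns that recur across the collection matter.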

What do we mean by “a pattern”?

To understand the nature of patterns in linguistic variation, one has to reflect on this question personally for some time. The focus of the reflection has to be how humans understand natural language expression.

A useful meditation is “What is the boundary of a concept?”

In our present invention, we remove from each sentence all occurrences of words that often do not indicate context by themselves. Again, some human judgment is required to do this properly, and the resulting “controlled vocabulary” needs to be reviewed periodically by editors and librarians.

We are assuming here that the stop words, words like "a", "the" and "from", are part of the background structure of language.

Of course, removing stop words loses some information, but it also provides a benefit: the patterns we are now looking for are uniformly simplified. This trade-off has to be understood by users and by those who have system maintenance responsibilities.
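The stop-word step described above can be sketched in a few lines of Python. This is only an illustration: the words "a", "the" and "from" come from the text, while the rest of the list, the tokenizing rule and the name `significant_terms` are assumptions. A real list would be the reviewed "controlled vocabulary".

```python
import re

# "a", "the" and "from" are named in the text; the other entries are
# assumed here purely for illustration.
STOP_WORDS = {"a", "the", "from", "and", "of", "to", "at"}

def significant_terms(sentence, stop_words=STOP_WORDS):
    """Lowercase the sentence, tokenize it, and drop the stop words."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return [w for w in words if w not in stop_words]

terms = significant_terms("The Wolf ran from the Lamb and a Shepherd.")
print(terms)  # ['wolf', 'ran', 'lamb', 'shepherd']
```

The output keeps the order of the remaining terms, which matters for any later measurement of which terms occur near each other.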

The structure of natural language reflects the re-occurrence of subject matter in social discourse, as addressed by the participants in that discourse. Our process simplifies the patterns using category theory. We assume that the simplified patterns have a one-to-one correspondence to the real world (that is, to what is external to the linguistic variation in natural language expression). This assumption is an interesting one when one looks at memetic theory as discussed by Susan Blackmore.

Memetic theory suggests that the patterns of expression are behavioral and habit-like, and thus that the invariance of patterns of co-occurrence should stand up to radical simplifications. The patterns are fractal in expression and measurement; see:

http://www.ontologystream.com/aSLIP/index1.htm

The simplification allows us, as humans, to look at the collection of all patterns in a text collection, whether small or large. We do this with a visualization tool such as the one Entrieva offers, or with the SLIP browsers.

It is important to note that the methodology appears to work as well for small collections as for large ones.

Being able to address small collections is a competitive advantage in

the current marketplace dominated by statistical methods.

All words are useful in speaking/writing and understanding what is

spoken/read.

But the fine structure of natural language

is more complex than what the commercial markets have so far

accommodated.

Example: In 1989 Oracle purchased a high-quality text analyzer with both deep case grammar analysis and ontology services. Then, over more than a decade of marketing-minded influences, documented in a history of Oracle, the company reduced this fine tool set to word statistical analysis. The markets were not selecting the best tool for concept extraction; the market was busy with short-term profits. Again, this is one example of a general problem with market economies driving scientific inquiry. Society obtains a poor scientific result due to the shallowness of the business thinking. Society loses value because our market economy does not always recognize the theorems of Nash on non-equilibrium market stabilities. (This is an observation, not a political statement.)

Today, the OntologyStream team is in a position to develop high quality

deep case grammar text analysis using the Visual Text developer's environment

so that the rules become situational. Visual Text International is part

of the SAIC-OntologyStream team as reflected in our DARPA submission.

http://www.ontologystream.com/area2/KSF/KnowledgeScience.htm

Our team is anticipating a strongly funded project managed by SAIC to

rapidly explore and deploy the technology that we have access to. Word on this funding is expected to arrive

this month (November).

But textual mark-up of terms or autonomous groupings of terms is only

part of the task. One has to re-represent the knowledge representation in

such a way that the re-representation is evocative of knowledge experience when

viewed by a human. The re-representation

might also allow some machine manipulation of the knowledge representation by

using the techniques of metaphoric analysis with cognitive graphs (John Sowa)

and other similarity analysis (such as work I did for NSA, declassification

methodology, in the mid 1990s).

http://www.bcngroup.org/area3/manhattan/sindex.htm

One can also talk about plausible reasoning and quasi-axiomatic theory:

Evaluation of indexing, routing and retrieval technology

But this discussion does really lead us into a deep theory of data

organizational processes.

Subject Matter Indicator neighborhoods are designed to

(1) re-represent the knowledge representation findable in natural language, and

(2) render this representation in a way that is evocative of the mental events that would occur when reading or hearing the natural language.

A more powerful way: Having reviewed the general nature of the problem, we see that the use of stop words can be replaced by a more complex process, whereby the text is parsed to "extract" the occurrences of parts, or plausible parts, of Subject Matter Indicators.

We may leave the stop words in, so that linguistic and ontology services can be deployed within one or more of the multi-pass text analyzer modules. These modules are constructed for the situation at hand, guided by an objective study of the text collection. (This is what I am asking Nathan, with Amnon’s assistance, to focus on.)

(On a practical note: Nathan’s time is being paid for from OntologyStream overhead, and Amnon is contributing his time as a personal favor. The result of this low-budget work may be a tutorial on the use of the Visual Text IDE as applied to the development of Subject Matter Indicator neighborhoods. We are focusing on the fable collection.)

The "art" of entity extraction has become very developed over the past three years in response to the New War. For example, one could use the tools provided by ClearForest to mine very large collections of text derived from web harvests.

http://www.bcngroup.org/area2/KnowledgeEcologies.htm

In the case of the two Fable Card Decks:

http://www.ontologystream.com/admin/temp/fableCards.zip

the extraction of Subject Matter Indicator neighborhoods employed some heuristics, such as:

1) identification of all well-formed sentences

2) removal of a long list of stop words

3) the development of a word-level 5-gram "measurement" of the co-occurrence of significant terms, that is, terms that are not stop words

4) the application of some principles of categorical abstraction to produce the abstract neighborhoods around each term

5) the manual removal of some of the 3600 neighborhoods

6) the clustering, using the SLIP tool, of the remaining graph of all neighborhoods (this graph exists because of a categorical collapse of all occurrences of the same string into a single abstraction, called a categoricalAbstraction "atom")

7) the removal, using the SLIP tool, of the highly connected neighborhoods and of those neighborhoods that are sparsely connected (this is similar to Autonomy's "use" of Shannon informational principles and Bayesian statistical principles)

The techniques are also used in other concept roll-up technology. (There is a discussion here.)
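Steps 3, 4 and 7 of the list above can be sketched roughly in Python. This is a loose illustration under stated assumptions, not the SLIP tool's algorithm, which this note does not specify. It assumes the 5-gram is a sliding window of five significant terms within one sentence, and that a neighborhood is the set of terms sharing a window with a given term. It uses a plain degree threshold as a stand-in for the removal of highly and sparsely connected neighborhoods. All function names, the threshold values and the sample data are hypothetical.

```python
from collections import defaultdict

def five_grams(terms, n=5):
    """Slide a window of n significant terms over one sentence."""
    if len(terms) <= n:
        return [tuple(terms)] if terms else []
    return [tuple(terms[i:i + n]) for i in range(len(terms) - n + 1)]

def build_neighborhoods(sentences_terms, n=5):
    """Collapse every occurrence of a string into one key (an "atom") and
    collect the terms that share a window with it."""
    neighborhoods = defaultdict(set)
    for terms in sentences_terms:
        for window in five_grams(terms, n):
            for term in window:
                neighborhoods[term].update(t for t in window if t != term)
    return dict(neighborhoods)

def prune_by_degree(neighborhoods, low, high):
    """Stand-in for step 7: keep atoms whose neighbor count is in [low, high]."""
    return {t: nb for t, nb in neighborhoods.items() if low <= len(nb) <= high}

# Made-up sentences, already reduced to significant terms.
corpus = [
    ["wolf", "saw", "lamb", "drinking", "brook", "resolved", "attack"],
    ["shepherd", "heard", "wolf", "attack", "lamb"],
]

orb = build_neighborhoods(corpus)
kept = prune_by_degree(orb, low=3, high=6)  # thresholds chosen arbitrarily

for term in sorted(orb):
    print(term, len(orb[term]), sorted(orb[term]))
```

Because the same string always maps to the same dictionary key, neighborhoods from different sentences are joined through shared terms, which is what turns the separate windows into a single graph.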

The success of the stated process is seen in the two card decks for the

fables. These two fable card desk are

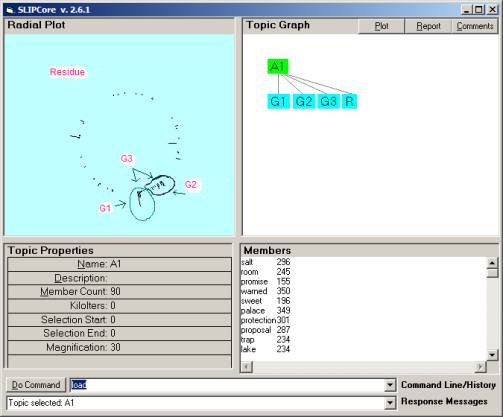

the contents of the two clusters marked in Figure 1 as “G1” and G2”.

Figure 1: Clustering of the center of the distribution of the ORB

We call the graph containing the Subject Matter Indicator neighborhoods the Ontology Referential Base, or ORB. The set of neighborhoods formally defines a topology in the mathematical sense.

The fidelity should improve with a switch from throwing out stop words to using a deep case grammar methodology to fill in a set of generalFramework templates. The deep case grammar analysis can follow the 1987 Wycal patent that was seen working in the Oracle ConText product during the 1990s.

Using the results from the generalFramework templates, one then develops co-occurrence information and encodes this information into the graph. This uses the same simple technology, with free tools available from OntologyStream labs.

A discussion of the generalFramework theory is at:

http://www.ontologystream.com/beads/enumeration/gFfoundations.htm

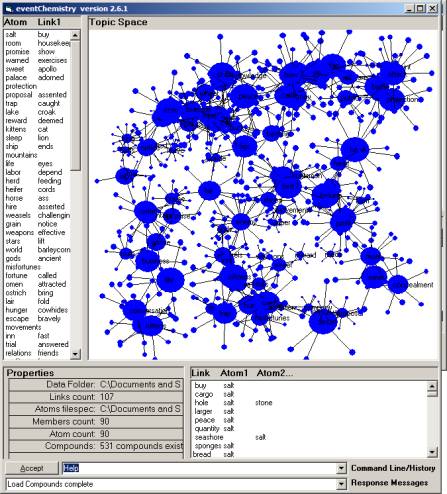

A picture of the random scattering of all neighborhoods in the category

A, in Figure 1, is shown in Figure 2.

Figure 2: The random scattering from 107 neighborhoods.

The neighborhoods in the category at node A1 are scattered into an observational window that is actually three-dimensional. The two decks of cards are the contents of the two sub-clusters G1 and G2 and contain 28 and 30 elements respectively. A deck has also been produced for the Residue, which contains the remaining elements of the category A1.

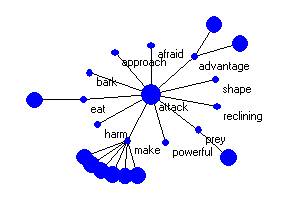

One of the cards is shown in Figure 3:

Figure 3: The “attack” Subject Matter Indicator neighborhood.

For questions please call me at: 703-981-2676.