Detecting Events in Computational Space

New product description from OntologyStream

May 23, 2002; revised May 29, 2002

Written by Dr. Paul S. Prueitt

Executive Summary

OntologyStream offers a fundamental innovation in methods for Human Information Interaction, a significant contribution to massive data structuring and organization, and a contribution to recognizing and using regularity in data structures.

From first principles, our scientists find that a system for optimal Human Information Interaction is best developed using categorical abstractions as the atoms of cognitive analysis. We also find, from first principles, that cognitive-graph-type knowledge representation plays an important role in formalizing and validating the social knowledge shared within a virtual community of practice.

Regularity in data structures allows a functional bypass of what is called “computational complexity”, by relying on the regularity of structure imposed by patterns of expression found in the data.

Synthetic Intelligence (SI), arising from machine-human interaction, involves:

- Generation of top-down expectancy based on opponent processing and frames of reference.
- Generation of bottom-up aggregation of perceived invariance and patterns.
- Representation and use of regularity in data structures.

Some qualities of natural cognition can be transferred from the cognitive neuroscience research literature, as expressed by Karl Pribram in “Languages of the Brain” (1971) and “Brain and Perception” (1991). The resulting “synthetic” intelligence product has desirable qualities found in the natural product, and yet lacks some of the undesirable qualities found in the natural product.

Synthetic Intelligence (SI) technology takes computer-based knowledge aids closer to the human perceptual-linguistic experience.

Scenario: A small set of unusual event patterns has been identified automatically as elements of categorical abstraction (cA). Profiles of the patterns are placed into an event library. According to a set of priority parameters, interpreters pick up the events and view the event patterns. Real-time human comments are captured using voice-to-text algorithms. One event seems suspicious. As part of a very natural conveyance of information, a virtual team forms to discuss the patterns. An event ticket is opened, and a dedicated collaborative system is autonomously brought online. The team is able to talk about the event patterns and directly share knowledge in reference to shared visual indications. A virtual white board allows hand drawing to illustrate the discussion, and the discussion is preserved.

The virtual team traces back to the data and, after further inspection, concludes that a novel event has occurred. The event is annotated, including notes on intent and technique, and joins the confirmed library of 30-40 major event types and 300 minor event types, each representing a family of variants within meaningful units. The conjectured causes of the event are noted in the annotation. Because evidence is acquired early, the motivation behind the causes of the event is recognized and acted on in real time. Learning within the community is greatly accelerated by a community knowledge base that preserves shared knowledge within a structured ontology as a cognitive graph (and related means of knowledge representation).

Discussion: OntologyStream is a new company, founded in 2001 on principles shared by Drs. Prueitt, Levine, Pribram, Kugler, Citkin and Murray and colleagues within an extended science community. These scientists have collaborated for over a decade in the areas of natural computing, general systems theory, and the nature of human action and perception. They have also collaborated with several science communities involved in defining what we are calling the New Computer Science.

These scientists are focused on two goals:

1) Demonstrate experimental cognitive science methodology in a formal study of Synthetic Intelligence (SI) technology systems.

2) Field a commercially available event detection system.

Visualization of computer data is often an appropriate way to engage human perception. But data visualization approaches break down as data sources continually grow larger. There are also deeper perceptual and behavioral issues that inhibit the adoption of HII systems.

Successful human and social learning is the basis for the cultural value derived from a cyclic process of action followed by perception. This involves humans being immersed in the experience and in the use of community language. We find that the scholarly literature on Human Information Interaction (HII) will not be transparent until HII involves the development and modification of natural language by those who use SI technology (SIT) systems in everyday activity. But we also need machine support to overcome classically understood behavioral characteristics that have a negative impact on truth seeking.

Humans are enormously capable as perceptual-language 'machines', but our analytic thinking is often flawed and simplistic. So how can human perception and cognition be studied in the context of critical intelligence gathering and analysis? And how can knowledge propagation within communities be studied?

Experimental cognitive science methodology can be applied to the study of Human Information Interaction if the fidelity of the information technology is of sufficient quality. Cognitive graph (ontology) based decision aids are not of sufficient quality by themselves, because there is no perceptual aspect to the system interaction. In most instances, the available ontology is not formed through a perceptual act. Without being formed through an act of perception, the information technology has a radically different, and often incompatible, nature when compared with acting and perceiving as part of the human experience of sense.

Within the cognitive neuroscience literature, images of achievement are said to direct human behavior (see Pribram’s chapter on this in “Brain and Perception”). But the perception of an external reality is necessary for tightly coupled action in the world.

When knowledge base systems are combined with an action-perception based system, the fidelity may be sufficient to warrant proper experimental study of human interaction with these systems.

The human perception system brings real-time properties and priorities and contextualizes an action space. The synthetic perception system extends human perception into virtual computational spaces.

Using categoricalAbstraction (cA), “synthetic perception” is about categorical invariance in data (structured or natural language data) and the categorical relationships that exist due to co-occurrence and pre-established frames (with slots and fillers).
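The co-occurrence half of this definition can be illustrated with a minimal sketch. It assumes each record is a tuple of field values, treats every distinct value as an atom, and treats every pair of values appearing in the same record as a link; the function name `abstract` is hypothetical, frames are omitted, and this is not the OntologyStream cA engine.

```python
from collections import Counter
from itertools import combinations


def abstract(records):
    """Reduce records to atom categories and co-occurrence link categories.

    An atom is a distinct field value (columns are not distinguished here);
    a link is an unordered pair of atoms that co-occur in one record.
    Each category is stored once, with a count of its occurrences.
    """
    atoms = Counter()
    links = Counter()
    for record in records:
        values = sorted(set(record))  # canonical order so pairs are unordered
        atoms.update(values)
        links.update(combinations(values, 2))
    return atoms, links


records = [
    ("10.0.0.5", "port-22"),
    ("10.0.0.5", "port-22"),
    ("10.0.0.7", "port-80"),
]
atoms, links = abstract(records)
print(len(atoms), len(links))  # 4 2
```

The two identical rows collapse into a single link category with a count of 2: categories, not individual occurrences, are what the abstraction retains.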

Is this really new?

The key to our work is found in perceptual measurement (see the work of Howard Pattee, Peter Kugler and Robert Shaw). Synthetic perception looks into the invariance of data flow using data-mining-type computing processes. The data flow is “measured” with a convolution operator that has the form of a conjecture. The invariance found is treated as if it were an invariance in the human experience of perception. To our knowledge, no other synthetic perceptual system is currently being applied to intelligence problems. The few synthetic perceptual systems that exist are small-scale laboratory applications meant to simulate the operation of natural senses, and these are not based on emergent abstractions from a massive flow of real-time data. However, the prior art for this work can be discussed.

The prior art for cA, and for the architecture in which we have embedded cA, is extensive but largely exists in literatures outside of Artificial Intelligence and Information Technology. This work includes long-term research efforts in the areas of reflective control, applied semiotics, and second-order cybernetics. Much of the documentation of this research traces to Former Soviet Union science organized under special governmental organization (prior to 1991), and it has only slowly been adopted into the intelligent control and machine intelligence literatures in the United States. Dr. Citkin is a specialist on this work.

Significant research also exists in the ecological psychology literature in the United States and Europe. Dr. Kugler is a specialist on this work.

Ours would be the first tool based on emergent abstractions of the type proposed that is intended for the analysis of large data sources.

The event detection system

We have a new technology for event detection. Very rapid algorithms select events based on the convolution of a conjectural form “over” massive data sets. The algorithms work at about the speed of a data encryption or data compression algorithm.

Various conjectural forms are selectable from an engine bank.
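The idea of selecting a conjectural form from a bank and convolving it “over” the data can be pictured with the following sketch. It assumes a stream of `source:action` strings, a window width of two, and two made-up conjectural forms; `ENGINE_BANK` and `convolve` are hypothetical names, and the actual forms (Russian applied cybernetics, link analysis) are not reproduced here.

```python
from collections import Counter
from typing import Callable, Dict, Hashable, Optional, Sequence

# Assumption: a conjectural form maps a window of consecutive stream items
# to a category key, or to None when the conjecture does not apply.
ConjecturalForm = Callable[[Sequence[str]], Optional[Hashable]]


def same_source_burst(window: Sequence[str]) -> Optional[Hashable]:
    """Conjecture: consecutive events share a source (text before ':')."""
    sources = {event.split(":", 1)[0] for event in window}
    return ("burst", sources.pop()) if len(sources) == 1 else None


def transition(window: Sequence[str]) -> Optional[Hashable]:
    """Conjecture: the ordered sequence of actions is meaningful."""
    return ("transition",) + tuple(e.split(":", 1)[1] for e in window)


# Hypothetical engine bank from which a form is selected by name.
ENGINE_BANK: Dict[str, ConjecturalForm] = {
    "burst": same_source_burst,
    "transition": transition,
}


def convolve(stream: Sequence[str], form_name: str, width: int = 2) -> Counter:
    """Slide the selected form over the stream and aggregate its categories."""
    form = ENGINE_BANK[form_name]
    found = Counter()
    for start in range(len(stream) - width + 1):
        category = form(stream[start:start + width])
        if category is not None:
            found[category] += 1
    return found


stream = ["a:login", "a:scan", "b:login", "b:scan", "b:scan"]
print(convolve(stream, "burst"))
print(convolve(stream, "transition"))
```

Swapping the form name changes what is “perceived” in the same stream, which is the role the engine bank plays.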

The first of these conjectural forms is based on Russian applied cybernetics and was developed (1995-1998, by Dr. Prueitt) to encode similarity relationships within textual and pictorial elements of scanned images. Drs. Kugler and Murray were involved in this work. Simple link analysis was the second example of a conjectural form. Prueitt and Dean Rich applied this conjectural form to a cyber warfare prototype (2001), which has now been demonstrated. The software engineer for our cyber warfare prototype is Don Mitchell.
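Simple link analysis, used as a conjectural form, can be sketched as grouping the two columns of a log into connected components. This is a generic graph technique chosen for illustration; the log rows and the function name `link_components` are hypothetical, and the procedure of the 2001 prototype is not described in this document.

```python
from collections import defaultdict


def link_components(pairs):
    """Group nodes into connected components of an undirected link graph.

    `pairs` holds (source, target) rows from a two-column log; each
    component is a candidate cluster of related activity.
    """
    graph = defaultdict(set)
    for a, b in pairs:
        graph[a].add(b)
        graph[b].add(a)
    seen, components = set(), []
    for start in graph:
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:  # iterative depth-first search
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(graph[node] - component)
        seen |= component
        components.append(component)
    return components


log = [("h1", "h2"), ("h2", "h3"), ("h4", "h5")]
print(sorted(sorted(c) for c in link_components(log)))
# [['h1', 'h2', 'h3'], ['h4', 'h5']]
```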

Formal notation describes this work.

We believe that cA can be shown to generalize the Latent Semantic Indexing (LSI) algorithms into a class of stochastic forms that act to produce categorical atoms and links designating invariance and relationship. The notation for this work is at the URL.

The notation for the voting procedure is at URL.

A general discussion and notation for Russian Quasi Axiomatic Theory is at URL and URL.

Hierarchical Taxonomy

An event database consists of levels of taxonomical organization. Perception acts on outside stimuli and encodes the results into the event database. In the simplest case, distributed instrumentation is placed in the computational space, such as an image library or a full-text database. Parsing processes are activated with a rule base. One category of examples of such processes is Intrusion Detection Systems (IDS), which parse log files and produce new log files based on rule firing.
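A toy version of rule-based parsing that turns raw lines into a two-column log might look like the following. The line format, the two rules, and the names `RULES` and `fire_rules` are invented for illustration; real IDS rule languages are far richer.

```python
import re

# Hypothetical rule base: (rule name, compiled pattern with a "src" group).
RULES = [
    ("ssh-probe", re.compile(r"SRC=(?P<src>\S+) .*DPT=22\b")),
    ("web-probe", re.compile(r"SRC=(?P<src>\S+) .*DPT=80\b")),
]


def fire_rules(lines):
    """Parse raw lines and emit one (source, rule) row per rule firing."""
    rows = []
    for line in lines:
        for name, pattern in RULES:
            match = pattern.search(line)
            if match:
                rows.append((match.group("src"), name))
    return rows


raw = [
    "kernel: SRC=10.0.0.5 DST=10.0.0.1 DPT=22",
    "kernel: SRC=10.0.0.7 DST=10.0.0.1 DPT=80",
    "kernel: SRC=10.0.0.9 DST=10.0.0.1 DPT=443",  # no rule fires
]
for row in fire_rules(raw):
    print("\t".join(row))  # two-column, tab-separated log output
```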

A log file is produced in the form of a table having two or more columns. A common input in the form of this log file was prototyped in systems developed by OntologyStream Inc. (2001-2002). Log files can also be produced by a linguistic parser, as in a generalized Latent Semantic Indexing. From the log file we produce atoms and relationship types. Emergent computing (a type of feature extraction and categorization process) produces event compounds. These compounds are then annotated and transferred to a cognitive-graph-type knowledge base. One is then able to form compounds of compounds and to trend event occurrences into models of cause and motivation.

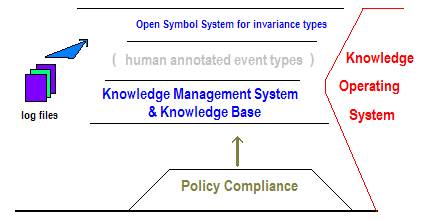

Figure 2: Four levels of knowledge representation

The bottom layer of the event database is developed autonomously. The data stream is “perceived” through the variation of conjectural convolutions, and a cognitive-graph-type knowledge base helps to constrain the perception into meaningful elements, using frames with slots and fillers as an intermediate control mechanism.
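How a frame can constrain perception is sketched below: a slot accepts a perceived value only if the value's category is one the slot allows. The `Frame` class, its slot names, and the category labels are hypothetical and stand in for whatever frame representation the knowledge base actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    """A frame whose slots accept fillers only from allowed categories."""

    name: str
    slots: dict  # slot name -> set of allowed filler categories
    fillers: dict = field(default_factory=dict)

    def offer(self, slot: str, value: str, category: str) -> bool:
        """Bind a perceived value to a slot if its category is allowed."""
        if category in self.slots.get(slot, set()):
            self.fillers[slot] = value
            return True
        return False  # the frame constrains perception: reject the candidate

    def complete(self) -> bool:
        """True once every slot has a filler."""
        return set(self.fillers) == set(self.slots)


probe = Frame("probe", {"source": {"ip"}, "target": {"port", "service"}})
probe.offer("source", "10.0.0.5", "ip")
probe.offer("target", "10.0.0.5", "ip")  # rejected: an ip cannot fill "target"
probe.offer("target", "22", "port")
print(probe.fillers, probe.complete())
```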

Humans will be able to modify the perception easily, using voice or textual commands, and can make interpretations based on visual clues and remembrances of past experiences. These features have been accounted for in our existing software.

The synthetic perceptual system is informed by cognitive influences in a way that parallels what is known about the human perceptual system. Specifically, the architecture directly reflects the organizational stratification in human perception, and the use of convolution (integration over space and time) as a means to move categorical information from one level of organization to a higher level. (Memory invariance becomes convolved into direct perception.)
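Convolution as integration over time, followed by categorization at the next level, can be shown with a one-dimensional sketch. The box kernel, the threshold of 6, and the labels “active” and “quiet” are illustrative assumptions; this is not a model of cortical processing.

```python
def convolve_1d(signal, kernel):
    """Discrete 'valid' convolution: weighted sums under a sliding kernel."""
    k = len(kernel)
    flipped = kernel[::-1]  # convolution flips the kernel
    return [
        sum(signal[i + j] * flipped[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def lift(signal, kernel, threshold):
    """Move up one level: integrate over time, then categorize by threshold."""
    return ["active" if v >= threshold else "quiet"
            for v in convolve_1d(signal, kernel)]


counts = [0, 1, 0, 4, 5, 3, 0, 0]  # level 0: events per time step
print(convolve_1d(counts, [1, 1, 1]))  # [1, 5, 9, 12, 8, 3]
print(lift(counts, [1, 1, 1], 6))
```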

In both human and synthetic perception, there is an inner perception and an outer perception, both having elements of cognition. The substance of synthetic perception is composed of categorical abstractions produced by a conjectural form acting on data. A substructural process occurs over time and location that is not perceived. In humans, perception occurs only as an emergent process that directly depends on this unperceived process.

This is like the massive number of individual photons passing into the retina. The individual photons cause quantum mechanical events that then add to a potential that is sampled by dendrites. A series of additional steps culminates in a physical, electromagnetically guided convolution over the cortical layers of the visual cortex (see the published works of Pribram). A range of adequate computational models of this neurophysical model exists (see the work of Juri Kropotov, for example).

The inner perception of synthetic perception can be modified in real time through human modification, direct or indirect, of sensor parameters and conjectural forms. What remains constant is that which is being looked at. We have not yet achieved this software functionality; however, the effect, when achieved, should be real-time “looking at” behavior. It is this behavior that can be properly studied by our human factors scientists. Drs. Meyers (SAIC) and Murray (OntologyStream) have provided a technology interface to these scientists and advise on knowledge engineering issues as they arise.

The outer perception comes from a cognitive interaction with remembrance of past events. This occurs both in the machine and in the human. In the second layer, event compounds are rendered visually as simple graphs. A top-down expectancy from the knowledge base can be used to structure the graph. This has not yet been done, but both Adaptive Critic neural networks (Paul Werbos) and Adaptive Resonance Theory (Stephen Grossberg) are very well known machine expectancy algorithms. Feature extraction and pattern completion are mature technologies. Human remembrance of the past may also structure the interpretation of the graph.
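As a much simpler stand-in for the expectancy algorithms named above (it is neither an adaptive critic nor ART), the sketch below uses an expected edge template from a knowledge base to partition an observed event graph into confirmed, novel, and missing edges. The function name and the edge labels are hypothetical.

```python
def compare_to_expectancy(observed_edges, expected_edges):
    """Split an observed event graph into confirmed, novel and missing edges.

    Edges are treated as undirected, so each is normalized to a frozenset.
    """
    observed = {frozenset(e) for e in observed_edges}
    expected = {frozenset(e) for e in expected_edges}
    return {
        "confirmed": observed & expected,  # matches the top-down expectancy
        "novel": observed - expected,      # not anticipated: flag for review
        "missing": expected - observed,    # anticipated but not yet seen
    }


template = [("scan", "login"), ("login", "copy")]  # from the knowledge base
seen = [("login", "scan"), ("copy", "exfil")]       # from the event layer
result = compare_to_expectancy(seen, template)
for part, edges in result.items():
    print(part, sorted(sorted(e) for e in edges))
```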

The SI technology can be exceedingly simple to use. The event stream can be rendered with sounds (like background music), so that humans can attend to other things and use only auditory acuity to sense the event stream behavior in real time. The third layer is a knowledge management system that connects members of a virtual community. Policy compliance is modeled and represented at the fourth level.

The bottom layer of the layered taxonomy is an open symbol system that depends on invariance types (atom and link categories) produced from the aggregation (convolution) of computer data at selected points within an instrumented system. An example of an instrumented system is a system for processing Intrusion Detection System audit logs. A second example is database access log files. A third example is a sub-event log file produced while a text (conceptual search) engine is finding correlations between textual elements (this is generalized LSI). In each case, the log file has the same simple (editable) format.

The bottom layer of the layered taxonomy is also a structure of sub-types related to the invariances that compose events of interest to the human community. This structure of sub-types can be compared, using grounded metaphor, with the “memory” of texture, color and form in a human perceptual system. Formal notations on the voting procedure (Prueitt) and quasi-axiomatic theory (Alex Citkin, Victor Finn and Dmitri Pospelov) exist for the autonomous aggregation of memory (categorically linked invariance) of this type into cognitive graphs. These formal notations support advanced methods that will be developed.

Used in this way, cA “memory” of invariance is aggregated into situated ontology: ontology with emergent contextual scope that will appear to the human as an instant retrieval of information.

The second layer of the layered taxonomy is an event layer that is responsive to a deployed infrastructure of human-annotated event types and to the visualization of pre-existing event patterns at the third level. This second layer can, in practical ways, have algorithmic interactions with a cognitive-graph-type knowledge base. Top-down expectancies are thus possible using any of a number of algorithmic methods (evolutionary programming, adaptive resonance theory, or adaptive critics). This class of expectancies can be compared with the connectionist scholarly work by Werbos, Grossberg, Holland and others on automating recall using formal models of associative memory. Our initial architecture is a bit simpler than these classical algorithms, but a more sophisticated associative memory will be implemented about midway through our 20-month commitment.
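For readers unfamiliar with automated recall in connectionist associative memory, a classical Hopfield network with Hebbian storage is a compact example. It is offered only as a textbook illustration of the model family; it is neither OntologyStream's initial architecture nor the planned memory, and the two stored patterns are arbitrary.

```python
def train(patterns):
    """Hebbian weights for a Hopfield network storing +1/-1 patterns."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, probe, max_sweeps=10):
    """Asynchronously update units until the state stops changing."""
    state = list(probe)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * state[j] for j in range(n))
            new = 1 if h >= 0 else -1
            if new != state[i]:
                state[i], changed = new, True
        if not changed:
            break
    return state


p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([p1, p2])
noisy = [1, 1, 1, -1, -1, -1, -1, -1]  # p1 with its fourth unit flipped
print(recall(w, noisy) == p1)  # True
```

A corrupted cue settles onto the nearest stored pattern; that completion behavior is what “automating recall” refers to.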

The more sophisticated associative memory will be developed under the direction of, and in consultation with, Professors Daniel Levine and Karl Pribram. Daniel Levine is one of the leaders in the cognitive science and biological/artificial neural network community (and was Dr. Prueitt's PhD advisor, 1988). Dr. Levine is a professor of psychology at the University of Texas at Arlington and has had several decades of scientific interaction with Professor Pribram and Drs. Kugler, Murray and Prueitt. Professor Karl Pribram is Professor Emeritus at Stanford (now at Georgetown University) and world renowned as one of the founders of the field of cognitive neuroscience. Both Pribram and Levine are advisors to OntologyStream Inc.

The third level of the layered taxonomy is a knowledge management system with knowledge propagation, together with a knowledge base system developed on Peircean logics (small cognitive graphs) that have a formative, and thus situational, aspect.

The fourth level of the layered taxonomy is a machine representation of the compliance models produced by policy makers.

Existing work

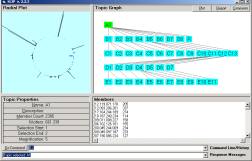

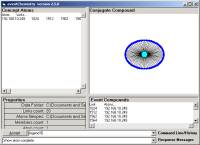

In Figure 3a, we show some of the early work on creating categories and rendering these categories as visual abstractions.

Figure 3a shows the results of a feature extraction process (scatter-gather on the surface of a sphere) that has produced a compositional ontology having five layers. Each element in the compositional ontology can be viewed as an event compound composed of elementary patterns of invariance and the types of relationships that these patterns have with other patterns. The compound has the nature of a chemical compound composed of elementary atoms (of invariance type) and valence (Figure 3b). Both elementary number theory and category theory are used in the underlying formalism (again, this formalism was developed by Drs. Prueitt, Murray and Kugler, with scholarly citation to a foundational literature).
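The chemical-compound analogy can be made concrete under one loudly stated assumption: that an atom type's valence is the maximum number of links it may take part in within a compound. The atom names, valences, and the function `valence_violations` are hypothetical and do not reproduce the number-theoretic formalism mentioned above.

```python
from collections import Counter


def valence_violations(valence, bonds):
    """Return atoms whose number of bonds exceeds their declared valence."""
    degree = Counter()
    for a, b in bonds:
        degree[a] += 1
        degree[b] += 1
    return {atom for atom, d in degree.items() if d > valence.get(atom, 0)}


# Hypothetical atom types (invariance categories) and their valences.
valence = {"src-ip": 2, "port-22": 1, "auth-fail": 1}
compound = [("src-ip", "port-22"), ("src-ip", "auth-fail")]
print(valence_violations(valence, compound))  # set()
print(valence_violations(valence, compound + [("port-22", "auth-fail")]))
```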

Figure 3 (panels a-d): Some of the early work on the formation and visual rendering of cA

Great flexibility is provided for the fast assembly of atoms (of invariance type) and link types into small colored icons (Figures 3c and 3d). An in-memory data structure is expressed, or rendered, directly in one pass over the structure. This is considered a perception of the map. Evidence has been acquired that this in-memory map structure has the nature of a hologram/fractal, in that partial (random) retrieval will often look very similar to complete retrieval. Human information interaction occurs during incomplete rendering, or when the event itself is only partially complete.
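Why partial random retrieval can look like complete retrieval is easiest to see when a few categories occur many times. The sketch below measures what share of distinct categories survives a 1% random sample; the synthetic rows and the function name are assumptions. It shows only the repetitive case: a category seen once in 10,000 rows would usually be missed by such a sample.

```python
import random


def category_coverage(rows, fraction, seed=0):
    """Share of distinct categories that survive a random sample of rows."""
    rng = random.Random(seed)  # seeded for repeatability
    k = max(1, int(len(rows) * fraction))
    sample = rng.sample(rows, k)
    return len(set(sample)) / len(set(rows))


# 10,000 occurrences of only 5 categories: highly repetitive structure.
rows = [f"cat-{i % 5}" for i in range(10_000)]
print(category_coverage(rows, 0.01))  # a 1% sample
```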

The categoricalAbstraction-based Synthetic Perceptual System (cA-SPS) is integrated with industry-standard, metadata-rich knowledge engineering systems through a translation into machine-readable ontology (such as XML with RDF, KIF, or Cognitive Graphs (CG)).
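One concrete form such a translation could take is RDF in the W3C N-Triples syntax, where each line is `<subject> <predicate> <object> .`. The namespace `http://example.org/ca#`, the link names, and the helper functions are hypothetical; the sketch handles only IRI terms, not literals or blank nodes.

```python
from urllib.parse import quote

BASE = "http://example.org/ca#"  # hypothetical namespace for cA terms


def iri(name: str) -> str:
    """Wrap a term in an absolute IRI, percent-encoding unsafe characters."""
    return f"<{BASE}{quote(name, safe='-._~')}>"


def to_ntriples(triples) -> str:
    """Serialize (atom, link type, atom) triples as RDF N-Triples lines."""
    return "\n".join(f"{iri(s)} {iri(p)} {iri(o)} ." for s, p, o in triples)


triples = [
    ("src-10.0.0.5", "probes", "port-22"),
    ("port-22", "followedBy", "auth-fail"),
]
print(to_ntriples(triples))
```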

Innovative claims for the research:

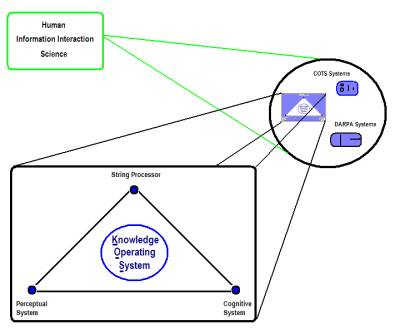

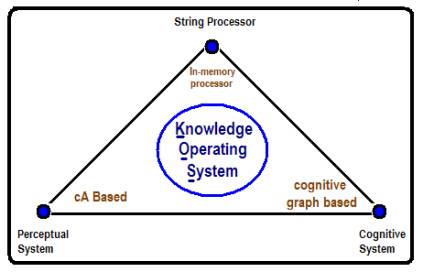

Knowledge Operating System (KOS)

- Synthetic Perceptual System (SPS): The main innovative claim is that a “synthetic perceptual system” is able to extract relevant data from massive data sets using a new approach that closely mimics the human perceptual-linguistic system. The architecture of the Synthetic Perceptual System is fully prototyped and can be demonstrated as (1) a computer information warfare system, (2) operational systems for generalized Latent Semantic Indexing for the conceptual segmentation of full text, (3) a system that traces the behavior of humans who access complex databases, and (4) a general event detection machine that can be applied to electromagnetic spectrum analysis. A research-based comparison has been made (by Prueitt) to modern cognitive science theories (academic: Gerald Edelman, Karl Pribram, Daniel Schacter, Donald Hoffman, Robert Shaw) on human memory, awareness and anticipation. Foundational elements from set theory are used.

- Synthetic Cognitive System (SCS): As part of our development, we reviewed several COTS knowledge base systems. We need only build an API to one of these (cognitive graph) systems and use the commercial system as a repository for small situated ontologies developed by the perceptual system and annotated through human interaction. We present a computer architecture (with algorithms) for knowledge artifact retrieval from a cognitive-graph-type knowledge repository based on hierarchical structure and reciprocal processing: opponent processing (as in most neural network architectures; Paul Werbos, Stephen Grossberg) and re-entrant processing (Gerald Edelman). We envision this hierarchical structure and reciprocal processing as part of the synthetic perceptual system, noting that a great deal of what might be called cognition occurs within the human perceptual system (private communication, Karl Pribram). The cognitive-graph-based Synthetic Cognitive System (SCS) is considered to be a separate system, whose primary function is to be a common repository of community knowledge structures.

- Semiotic String Processor: A powerful, yet simple, string processor was developed (2001-2002) by Don Mitchell. We consider this string processor to be the kernel of the OntologyStream Knowledge Operating System. Don Mitchell is the Director of Software Development at OSI. He is committed full time to the development of all aspects of the work that OntologyStream does, including work performed by two additional software engineers. Dr. Prueitt has adequate program management to coordinate this effort. Internally, the Semiotic String Processor (SSP) receives string commands over time, emits over time a series of change events that occur in internal data models, and enables binding of perceived categorical abstractions directly to a data model. The SSP provides a convolution of an inquiry as string output of patterns of human behavior (during analytic work using the KOS). This string is a choreography of the sign system found to characterize the behavioral actions of a human in response to the perception of categorical invariance in the data set. The string processor is the interface between humans and our synthetic perceptual and cognitive systems. The core SSP engine models human behavioral habits in interactions with the SPS and the SCS. The focus is on allowing the (human/synthetic) perceptual system to be the primary interface for users. One purpose of the string processor is to alter instrumentation and sensor parameters for the input of log files into the categorical abstraction engine. The second purpose is to control the interactions between the knowledge base and the cA-based perceptual system. The result of these actions is a convolution over the data (log files) that produces categories of invariance, which are rendered visually, as sound, or as control engines.
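The described behavior of the SSP (string commands in over time, change events out, binding of perceived categories to a data model) resembles a command interpreter with observers. The sketch below is a hypothetical toy: the command vocabulary (`set`, `bind`, `del`), the event tuples, and the class name are invented and do not describe the actual SSP.

```python
from typing import Callable, List


class StringProcessor:
    """Toy string-command processor that emits change events (hypothetical)."""

    def __init__(self) -> None:
        self.model: dict = {}  # internal data model
        self.listeners: List[Callable[[tuple], None]] = []

    def subscribe(self, callback: Callable[[tuple], None]) -> None:
        self.listeners.append(callback)

    def _emit(self, event: tuple) -> None:
        for callback in self.listeners:
            callback(event)

    def execute(self, command: str) -> None:
        """Handle 'set KEY VALUE', 'bind NAME CATEGORY' and 'del KEY'."""
        parts = command.split()
        verb, args = (parts[0], parts[1:]) if parts else ("", [])
        if verb == "set" and len(args) == 2:
            key, value = args
            old = self.model.get(key)
            self.model[key] = value
            self._emit(("changed", key, old, value))
        elif verb == "bind" and len(args) == 2:
            # Bind a perceived category directly into the data model.
            name, category = args
            self.model["binding:" + name] = category
            self._emit(("bound", name, category))
        elif verb == "del" and len(args) == 1 and args[0] in self.model:
            old = self.model.pop(args[0])
            self._emit(("deleted", args[0], old))
        else:
            self._emit(("rejected", command))


events = []
ssp = StringProcessor()
ssp.subscribe(events.append)
for cmd in ["set window 2", "bind probe cat-17", "set window 3", "zap"]:
    ssp.execute(cmd)
print(events)
```

The ordered list of emitted events is itself a string-like record of analyst behavior, which is the kind of output the SSP is said to produce.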

Innovations in Algorithms and Processing Architecture (IAPA)

- Fast in-memory processing with no third-party dependencies: Our team can supply further innovation regarding the computational production of categorical abstraction (cA) and an In-Memory Referential Information Base (I-RIB) to support the KOS. The In-Memory Referential Information Base allows our systems to access, aggregate, and manipulate massive data sources more efficiently and more quickly than any conventional system to date. This work has already been completed by OntologyStream Inc. and can be demonstrated.

- Data reduction: Categorical abstraction is an absolutely critical innovation for addressing massive data structuring and organization. The synthetic perceptual system renders categories of invariance rather than individual occurrences of data events. If a data event occurs just once, it is rendered as a category. If the data event occurs a billion times, it is still rendered as a single category. OntologyStream Inc. has discovered that this use of cA is able to “see” the event types, and variations on event types, in the data, and to produce visual icons (small graph structures) that are the data (seen as organizational categories). The relevant data is retrieved with 100% precision and recall, and the icons can be used to retrieve from data sets not involved in the event definition.

- Regularity in data structure: Data structure sufficient to the required computational processes has regularity and predictiveness within context. However, in most cases this regularity is not discovered and used to express control flow. Following certain new trends in interoperability and standards processes (XML, RDF, KIF, Topic Maps), we account for structural regularity and predictiveness within context as part of the Knowledge Operating System's internal data formatting. Two software engineers will address the enterprise-level software standards essential to out-of-the-box COTS software, developing this aspect of the architecture under the direction of Drs. Meyers and Prueitt.
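The internals of the I-RIB are not described here, so the sketch below substitutes a well-known technique, dictionary encoding, in which every distinct value is stored once and rows hold integer references. It also illustrates the data-reduction claim at a small scale: an event repeated 100,000 times and an event seen once each become a single category. The class name and the data are hypothetical.

```python
from collections import Counter


class ReferentialBase:
    """Stand-in for a referential store: each distinct value is kept once."""

    def __init__(self) -> None:
        self.ids = {}     # value -> integer reference
        self.values = []  # integer reference -> value

    def ref(self, value: str) -> int:
        """Return the reference for a value, registering it on first sight."""
        if value not in self.ids:
            self.ids[value] = len(self.values)
            self.values.append(value)
        return self.ids[value]


rib = ReferentialBase()
# One event repeated 100,000 times plus one event seen once.
rows = [("10.0.0.5", "port-22")] * 100_000 + [("10.0.0.7", "port-80")]
categories = Counter((rib.ref(a), rib.ref(b)) for a, b in rows)
print(len(rows), len(categories), len(rib.values))  # 100001 2 4
```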

Basic innovations entail several surprising and beneficial features that will be substantiated during the research project:

- The system detects novel intelligence by design, without special effort to prefigure or anticipate such results.
- Because object invariance within large data sets can be shown to be fractal or holographic, massive data sets can be randomly sampled yet still yield effective results.
- The combination of extremely efficient first-level processing, reliable sampling, second-level processing of compact abstractions, and potential for parallel execution of all these processes yields a system that can scale up massively with no upper limit.
- The ability to make meaningful distinctions improves with use. It is a learning system.
- The method and the software can be applied readily in many different domains without elaborate set-up and reconfiguration.
- The system can work completely independently of other systems and requires no third-party software, thus increasing compatibility.

The work has been developed over a period of twenty years. Our work in this area fills a critical gap.

Intelligence vetting must be made both autonomous and automatic. Abstraction levels, with drill-down, are necessary if the policy makers of our representative democracy are to be properly informed, in real time, of what is in fact going on in the computational spaces.

Our system provides the first significant breakthrough in human/computer anticipatory systems.

We argue that emergent categories of data invariance, when harnessed with the human perceptual system, can make sense of massive data and efficiently find novel intelligence.

The process and software are to be applied to several domains, such as textual intelligence, in which informational novelty resides in massive data. We anticipate image-understanding techniques and have strategies for acquiring data from large image databases.

The domain expert (i.e., the intelligence analyst) and the software component communicate via the conjectural statement, images of the categorical abstractions, and annotations attached to images. Data collected by sensors and instrumentation is accumulated into an in-memory data structure. The data structure in memory is then processed to extract structures that are in turn served up to an interpretation layer. An augmented librarian classifies the new abstractions, places them in the visual library, and alerts analysts or response bots. Analysts view aspects of the abstraction and may act on the item. Interpretations are fed back to the system to modify visual cues and shared memory. The man-machine system adapts and learns, producing general and comprehensive models of events, and of the relationships between events, so that each event type can be distinguished from other events.
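The augmented librarian's classify-or-alert step can be sketched as a nearest-match lookup. Here each abstraction is summarized as a set of link categories, matched against library entries by Jaccard similarity, and treated as novel below a threshold of 0.6. The similarity measure, the threshold, the library contents, and the function names are all assumptions made for illustration.

```python
def classify(signature, library, threshold=0.6):
    """Best-matching known event type by Jaccard similarity, or None if novel."""
    best_type, best_score = None, 0.0
    for event_type, known in library.items():
        union = signature | known
        if not union:
            continue  # two empty sets: nothing to compare
        score = len(signature & known) / len(union)
        if score > best_score:
            best_type, best_score = event_type, score
    return (best_type, best_score) if best_score >= threshold else (None, best_score)


def librarian(signature, library, alerts):
    """Classify a new abstraction; alert on anything that matches no known type."""
    event_type, _ = classify(signature, library)
    if event_type is None:
        alerts.append(("novel", sorted(signature)))  # route to analysts or bots
    return event_type


library = {
    "ssh-scan": {"ip->port-22", "port-22->auth-fail"},
    "web-crawl": {"ip->port-80", "port-80->get"},
}
alerts = []
print(librarian({"ip->port-22", "port-22->auth-fail", "auth-fail->retry"}, library, alerts))
print(librarian({"ip->port-53", "port-53->axfr"}, library, alerts))
print(alerts)
```

The first abstraction is filed as a variant of a known type; the second matches nothing and raises a “novel” alert, which mirrors the detection of previously unknown event types.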

Features of the system

- The system is capable of detecting previously unknown types of events, as well as events whose types are described in a shared library (knowledge base).
- It produces abstractions and visualizations of anomalous events that are well suited to human cognitive processing.
- The interface will support multi-mode interaction, including voice and various kinesthetic modes.
- The system is immersive, in the sense of allowing humans to interact with elements as if learning a language.
- The human is NOT placed in the role of observer or consumer of an external process.
- The system puts the cognitive load on the analyst, who learns to see meaning in images of categorical abstractions.
- The process is deterministic. Object/non-object boundaries are drawn with formalized thresholds to produce ‘object recognition’.
- The mastery (and construction) of new language is achieved by human action within a community of practice.
- The system supports knowledge sharing and employs peer-to-peer transmission and a collaborative architecture.

The first project milestone is to acquire categorical abstractions from a data set and to have the analyst provide interpretations that are then shared. We will conduct experiments and make corrections and enhancements to this unique system for specific application in the intelligence domain.

Expectations

We expect that the American government is serious about moving intelligence technology toward Predictive Analysis and Anticipatory Mechanisms. There are significant cultural barriers that agencies of the American government will have to address. However, reaching into the science community is the only way that these barriers can be moved aside.

Scientists in the fields of general systems theory, cognitive psychology and human factors will study Human Information Interaction (HII) with advanced knowledge technologies. But one can already see a judgment about the deployed information technology: this technology does not have high fidelity to what is known, and has been known for some decades, about the nature of human natural intelligence and natural language.

Current generations of DARPA knowledge representation technology have produced a number of high-quality ontology constructors and interfaces to machine ontology. These are now available for purchase as COTS products. What has not been developed at DARPA is a technology based on the derivation of categorical invariance over the real-time structure of data in computational spaces.

Figure 3: The High-Level View of a Knowledge Operating System

Natural language learning and natural language use provide a different type of cognitive (behavioral) immersion. There is an adequate scientific literature and body of academic work in these areas. However, the scientists working in these areas generally do not show an interest in DARPA-developed technology (except as funded by government programs). The core business of OntologyStream is the properly grounded evaluation of knowledge technologies based on HII research principles.

Scenario: A terrorist organization has assembled four independent groups of computer hackers across the world. Each group is given a different task, and each task supports another group's task. The interweaving of tasks creates a well-coordinated, precisely timed attack.

The first group's task is to breach the internal U.S. power grid command and control networks.

The second group is tasked with electronically breaking into five major U.S. banks and the Federal Reserve.

Meanwhile, the third and fourth groups are "running interference" by launching false or partial attacks on various government agencies and facilities. Groups one and two begin preparing their attacks with basic Internet searches and reconnaissance probes into the targeted networks. Because these probes (and, later, the attacks) are distributed and well coordinated, each computer intrusion analyst sees only basic scans and attack attempts, of which they see thousands per day. Given the limited scope of each analyst's sensor arrays and data, there is no reason for them to believe that anything particularly devious is in the works.

Each analyst at each facility allows the system to log the attempts automatically but overlooks them because such attempts are routine. Later, the attacks are carried out, and internal security is breached at 90% of the targets; only the Federal Reserve is not breached, owing to its existing security measures. Once the full attacks are launched, the computer intrusion analysts are unable to repel them completely because of the obfuscation and interference created by groups three and four. The U.S. economic infrastructure is devastated, hospitals are forced to run on emergency power, many people die from the power outages, and mass chaos ensues.

Had a system such as our proposed synthetic perception system, using categorical abstraction together with a well-made event base, been employed throughout this attack, the outcome would have been significantly different.

The system will be able to examine all the data from intrusion detection systems nationwide and identify patterns that indicate a coordinated attack. This information would then be presented to the analyst for further review and corroboration. Further, had the coordinated attack patterns gone unchecked by the human analyst and the attack still been carried out, the system would have been able to detect which attacks were "real" and which were mere attempts to obfuscate them. This would allow the nation as a whole to prioritize its response to attacks not by what is being attacked, but by whether the target is believed to be the actual target. Without this capability, the analysts' first instinct would be to protect the major intelligence networks, leaving the banking and power systems lower in priority because of the false attacks launched on the intelligence networks.
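One simple way to picture "identifying patterns that indicate a coordinated attack" is a cross-site correlation rule: if the same normalized alert signature shows up at several distinct facilities within a short time window, flag it for the analyst. The Python below is a minimal sketch of that rule only, swapped in for illustration; it is not the categorical abstraction method, and the `Alert` fields, the signature strings, and the default `min_facilities` and `window_seconds` values are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    facility: str   # hypothetical: the site whose sensor raised the alert
    signature: str  # hypothetical: a normalized pattern, e.g. probe type and port
    timestamp: int  # seconds since epoch


def coordinated_patterns(alerts, min_facilities=3, window_seconds=3600):
    """Return signatures seen at >= min_facilities distinct sites within one time window."""
    by_signature = defaultdict(list)
    for alert in alerts:
        by_signature[alert.signature].append(alert)

    flagged = {}
    for signature, group in by_signature.items():
        group.sort(key=lambda a: a.timestamp)
        start = 0
        # Slide a time window over this signature's alerts, oldest first.
        for end in range(len(group)):
            while group[end].timestamp - group[start].timestamp > window_seconds:
                start += 1
            sites = {a.facility for a in group[start:end + 1]}
            if len(sites) >= min_facilities:
                flagged[signature] = sorted(sites)
                break  # one qualifying window is enough to flag the signature
    return flagged


ALERTS = [
    Alert("grid-east", "scan:tcp-502", 100),
    Alert("grid-west", "scan:tcp-502", 900),
    Alert("bank-ny", "scan:tcp-502", 2000),
    Alert("bank-ny", "scan:tcp-22", 50),
    Alert("agency-dc", "scan:tcp-22", 90000),
]
result = coordinated_patterns(ALERTS)
print(result)  # {'scan:tcp-502': ['bank-ny', 'grid-east', 'grid-west']}
```

Each site on its own sees a single routine scan; only the pooled, nationwide view reveals that one signature hit three different sectors within about half an hour, which is the kind of pattern the scenario says an individual analyst would miss.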

Market Integration: The proposed synthetic perceptual system is designed specifically with interoperability in mind. It can work completely independently of all other systems and requires no third-party software or modules. This independence allows it to be integrated with any number of modules or systems. Compatibility with any and all data sources and systems will be achieved using the relevant XML, RDF, KIF, XTM, and upper ontology standards. The stand-alone modules are lightweight, sometimes less than one megabit in file size. These modules will exist as Internet browsers and can address any data source.
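As an illustration of how a categorical abstraction might be handed to other systems through one of the standards named above, the Python below serializes a small abstraction (a set of atoms plus links between atoms) as RDF in the N-Triples line format. The namespace `http://example.org/ca#` and the property names `hasAtom`, `hasLink`, `from`, and `to` are hypothetical placeholders, not an OntologyStream vocabulary; only `rdf:type` is a real RDF term. It is a minimal sketch, assuming abstraction names are already safe to use inside an IRI.

```python
RDF_TYPE = "<http://www.w3.org/1999/02/22-rdf-syntax-ns#type>"
BASE = "http://example.org/ca#"  # hypothetical namespace, not a real OntologyStream vocabulary


def iri(local_name):
    """Build an absolute IRI in the hypothetical namespace (local_name must be IRI-safe)."""
    return f"<{BASE}{local_name}>"


def literal(text):
    """Escape a string as an N-Triples literal (backslash, quote, newline, carriage return)."""
    escaped = (text.replace("\\", "\\\\").replace('"', '\\"')
               .replace("\n", "\\n").replace("\r", "\\r"))
    return f'"{escaped}"'


def abstraction_to_ntriples(name, atoms, links):
    """Serialize one categorical abstraction (atoms plus atom-to-atom links) as N-Triples."""
    subject = iri(name)
    lines = [f"{subject} {RDF_TYPE} {iri('CategoricalAbstraction')} ."]
    for atom in atoms:
        lines.append(f"{subject} {iri('hasAtom')} {literal(atom)} .")
    for i, (left, right) in enumerate(links):
        link = iri(f"{name}_link{i}")  # each link becomes its own resource
        lines.append(f"{subject} {iri('hasLink')} {link} .")
        lines.append(f"{link} {iri('from')} {literal(left)} .")
        lines.append(f"{link} {iri('to')} {literal(right)} .")
    return "\n".join(lines) + "\n"


nt = abstraction_to_ntriples("ca1", ["dport=22", "src=10.0.0.5"], [("src=10.0.0.5", "dport=22")])
print(nt)
```

N-Triples was chosen here only because it is the simplest RDF serialization to produce without third-party libraries, which fits the stand-alone, no-dependency goal described above; an XTM or KIF export would follow the same pattern with a different output syntax.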

A perceptual system that looks into data invariance results in new human language use, and this language use can be mediated by knowledge base technology.

Outcomes

- Intelligence operations effectively monitor massive data as a matter of course without hitting processing constraints.

- Analysts recognize the potential of this approach; commitments change.

- As means are found to extract the full intelligence value from data, the massive sunk cost in data collection apparatus begins to pay off.

Comparison To Other Ongoing Research:

The Cognitive Graph (CG) approach has extended the principles of Charles Sanders Peirce's Existential Graphs, Entity Relation Diagrams, Semantic Networks, and XML-type ontology representation. Once knowledge is expressed in a CG, various technologies provide a direct mapping from CGs to first-order logic. CGs are used in a number of COTS systems to manage n-ary relations in a novel way.
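The direct mapping from CGs to first-order logic mentioned above is, in John Sowa's formulation of conceptual graphs, the φ operator: each generic concept becomes an existentially quantified variable, each individual concept becomes a constant, and each relation of any arity becomes a predicate over those terms. The Python below is a minimal sketch of that mapping for simple graphs only (no nested contexts, negation, or coreference links); the `Concept`, `Relation`, and `cg_to_fol` names are hypothetical and do not correspond to any particular COTS product.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Concept:
    type_label: str
    referent: Optional[str] = None  # None means a generic concept ("some X")


@dataclass(frozen=True)
class Relation:
    label: str
    args: Tuple[int, ...]  # indices into the concept list; any arity (n-ary)


def cg_to_fol(concepts, relations):
    """Map a simple conceptual graph to an existentially quantified conjunction."""
    terms, variables = [], []
    for concept in concepts:
        if concept.referent is None:
            var = f"x{len(variables) + 1}"  # fresh variable for each generic concept
            variables.append(var)
            terms.append(var)
        else:
            terms.append(concept.referent)  # individual concept becomes a constant
    atoms = [f"{c.type_label}({t})" for c, t in zip(concepts, terms)]
    atoms += [f"{r.label}({', '.join(terms[i] for i in r.args)})" for r in relations]
    quantifiers = "".join(f"∃{v} " for v in variables)
    return f"{quantifiers}({' ∧ '.join(atoms)})"


# "A person gives a book to Mary."
concepts = [Concept("Person"), Concept("Give"), Concept("Book"), Concept("Person", "Mary")]
relations = [Relation("Agnt", (1, 0)), Relation("Thme", (1, 2)), Relation("Rcpt", (1, 3))]
formula = cg_to_fol(concepts, relations)
print(formula)
# ∃x1 ∃x2 ∃x3 (Person(x1) ∧ Give(x2) ∧ Book(x3) ∧ Person(Mary) ∧ Agnt(x2, x1) ∧ Thme(x2, x3) ∧ Rcpt(x2, Mary))
```

Because `Relation.args` is a tuple of any length, a ternary relation such as `Between(a, b, c)` is handled exactly like the binary ones, which is the n-ary capability the paragraph refers to.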

Architecturally, we regard the cA Synthetic Perceptual System (cA-SPS) as requiring a CG Synthetic Cognitive System (CG-SCS) in the form of a standard CG-type knowledge base. We assume responsibility for integrating the CG-SCS and the cA-SPS and for studying Human Information Interaction with the combined Synthetic Intelligence (SI) system. The differences and similarities between Artificial Intelligence (AI) and Synthetic Intelligence are the subject of some current scientific publications.

These publications include literature on the new sciences of quantum-neuro interfaces and nanotechnology. The central authors in this scholarly literature are Stuart Hameroff (University of Arizona), Sir Roger Penrose (Cambridge University), Karl Pribram (now at Georgetown, formerly at Stanford), Robert Shaw (University of Connecticut), and others.

CG-type systems generally assume that knowledge can be fully represented as tokens in logic and as rules based on these tokens. Many technologists and some cognitive scientists see merit in this assumption. However, it is actively disputed in important foundational scholarship, such as that of Karl Pribram (cognitive neuroscience) and Robert Shaw (ecological foundations of perception).

In contrast, the cA-SPS produces small, situated ontology structures (easily converted to Peircean-type CGs) through a very rapid reduction of the occurrences of patterns in massive data sets. These small, situated ontologies are not interpreted by the rules of a first-order logic. Instead, the cA are visually rendered in a 2-, 3-, or n-dimensional space, and the visual acuity of human observers engages the natural cognitive activities of the brain. Sharing knowledge about these small, situated ontologies engages the natural exercise of judgment by a community of practice.
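The reduction step can be pictured as collapsing many records into a handful of small graphs. The Python below is a minimal sketch under the assumption that each record has already been broken into atoms (field=value strings): it tallies which atoms co-occur, keeps links seen at least `min_links` times, and returns each connected component as one small structure of atoms and weighted links, ready to be drawn in two or three dimensions or converted to CG form. This plain co-occurrence-plus-components pass is a stand-in chosen for illustration, not the published cA algorithm, and the function name and `min_links` parameter are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations


def reduce_to_situated_ontologies(records, min_links=1):
    """Collapse many records into small graphs of atoms, one graph per connected component."""
    link_counts = Counter()
    for record in records:
        atoms = sorted(set(record))
        for pair in combinations(atoms, 2):
            link_counts[pair] += 1  # each pair of co-occurring atoms is one link occurrence

    # Keep only links seen at least min_links times, then build an undirected adjacency map.
    adjacency = defaultdict(set)
    for (a, b), n in link_counts.items():
        if n >= min_links:
            adjacency[a].add(b)
            adjacency[b].add(a)

    # Depth-first search: each connected component becomes one small structure.
    seen, components = set(), []
    for start in sorted(adjacency):
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            component.add(node)
            stack.extend(adjacency[node] - seen)
        links = {pair: n for pair, n in link_counts.items()
                 if n >= min_links and pair[0] in component}
        components.append((sorted(component), links))
    return components


SAMPLE = [
    ["src=A", "dport=22"],
    ["src=A", "dport=22"],
    ["src=B", "dport=80"],
    ["src=A", "dport=23"],
]
for atoms, links in reduce_to_situated_ontologies(SAMPLE):
    print(atoms, links)
```

Raising `min_links` shrinks the output to only the most repeated structure, which is one way the size of each small ontology could be tuned before it is shown to an analyst.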

The cA-SPS and artificial knowledge representation systems (such as those that depend on CGs) have a clear separation of responsibilities. The cA-SPS acts as an event perception algorithm that looks into the artificial world of computer data. The CG systems can take the event representation and convert it into a standard machine ontology expressed in first-order predicate logic.

Key project members

- Kent Meyers, PhD. SAIC scientist and program manager.

- Paul Prueitt, PhD (OntologyStream Inc.). Developer of the cA method and the software. Knowledge scientist. Founder of OntologyStream. Technology evaluation and knowledge technology patent specialist.

- Arthur Murray, PhD (OntologyStream Inc.). Knowledge Engineer and Human Factors Expert. Director of the George Washington University Knowledge Management Program.

- Peter Kugler, PhD (OntologyStream Inc.). Former Eminent Scholar of the Commonwealth of Virginia, General Systems Theorist and Human Factors Expert. Member of the ecological psychology community (centered at the University of Connecticut).

- Dean Rich (Cyber-Security Inc.). Officially designated "White Hat" expert in computer attacks. Serves as initial domain expert. Has extensive experience with information warfare.

- Don Mitchell (OntologyStream Inc.). Director of Software Development.

OntologyStream Advisors

- Dr. Daniel Levine (University of Texas at Arlington)

- Dr. Karl Pribram (Georgetown University; Stanford University Professor Emeritus)

- Dr. Robert Shaw (University of Connecticut)

- Dr. Alex Citkin (BCNGroup)

- Dr. Fiona Citkin (BCNGroup)

- Dr. Richard Ballard (Knowledge Foundations Inc.)